Spotted at the Science Gallery at King’s College, London, this week (open to the public).

A new ‘map’ is coming, hopefully next week. In my humble opinion, it’s the best one yet, certainly from an art/science collaboration perspective.

I was at the Web Summit in Lisbon last week. I’ve never seen quite so many black North Face rucksacks in one place, although black faces were almost totally absent. OK, there was some refreshing energy and optimism, but also quite a bit of delusion (in my opinion) surrounding the future of AI and the inevitability of conscious machines.

But the best bit was, without doubt, the sustainable merchandise. Hand-knitted jumpers for £800 and reusable drink containers. Heaven forbid that any of the 70,000 attendees used a single-use coffee cup. These, of course, were all sold alongside the fact that tech uses around 15–20 per cent of global energy (depending on whom you believe) and has a carbon footprint that would put BP and Boeing to shame (see a good article on the carbon footprint of AI here).

Clearly, any industry will create emissions, especially during a transition to clean energy, but what gets my goat is how certain groups and individuals have focussed on one area (e.g. flying) to the total exclusion of others. For example, emissions from the global fashion and textile industries match and possibly exceed those from aviation.

BTW, if anyone has a reliable figure for the carbon emissions created by Apple, Facebook, Uber et al. – but especially Google and Amazon (incl. AWS) – please share!

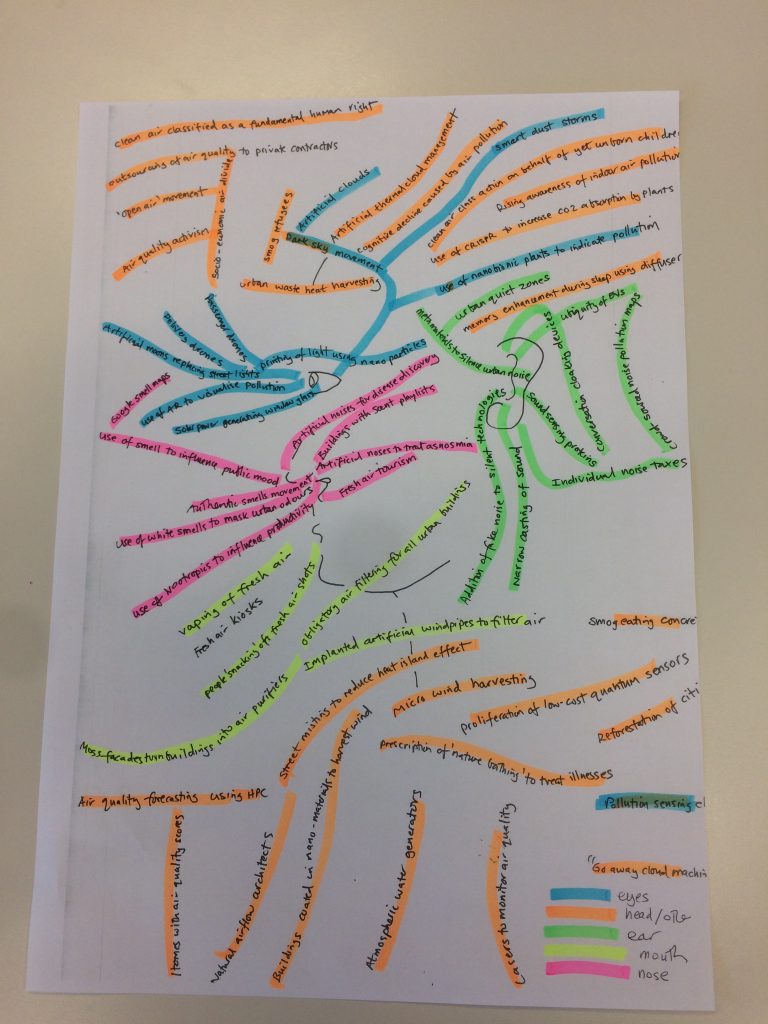

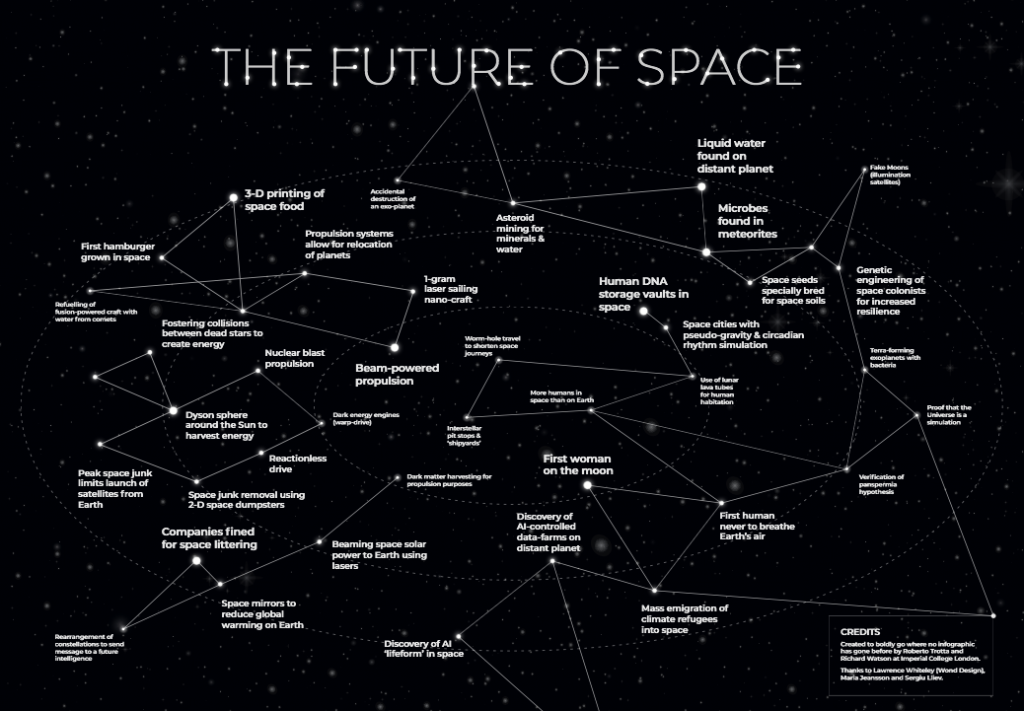

Here’s a visual showing some of the things that might theoretically be possible in the distant future. Note that all the ideas listed here are possible from a laws-of-physics point of view, although the engineering challenges and costs associated with most of them are, shall we say, a bit ‘far out’.

The way this works is that the ideas that appear closest (in larger type) are nearer in time than those that appear further away (in smaller type). There’s some vague clustering of ideas too (for example, human habitation, propulsion, water and so on).

The ideas are as follows:

First woman on the moon

First human never to breathe Earth’s air

Mass emigration of climate refugees into space

More humans in space than on Earth

Use of lunar lava tubes for human habitation

Space cities with pseudo-gravity & circadian rhythm simulation

Discovery of AI-controlled data-farms on distant planet

Discovery of AI ‘lifeform’ in space

Verification of panspermia hypothesis

Proof that the Universe is a simulation

Genetic engineering of space colonists for increased resilience

Terraforming exoplanets with bacteria

Use of synthetic biology to detoxify space soil for agriculture

Space seeds specially bred for space soils

Human DNA storage vaults in space

Microbes found in meteorites

Liquid water found on distant planet

Asteroid mining for minerals & water

Refuelling of fusion-powered craft with water from comets

3-D printing of space food

First hamburger grown in space

3-D & 4-D printing of space cities in-situ

Self-reconfiguring modular robots for space-based construction

Peak space junk limits launch of satellites from Earth

Space junk removal using 2-D space dumpsters

Companies fined for space littering

1-gram laser sailing nano-craft

Interstellar pit stops & ‘shipyards’

Wormhole travel to shorten space journeys

Nuclear blast propulsion

Beam-powered propulsion

Reactionless drive

Dark matter harvesting for propulsion purposes

Dark energy engines (warp-drive)

Dyson sphere around the Sun to harvest energy

Fostering collisions between dead stars to create energy

Propulsion systems allow for relocation of planets

Accidental destruction of an exoplanet

Rearrangement of constellations to send a message to a future intelligence

Space mirrors to reduce global warming on Earth

Beaming space solar power to Earth using lasers

Fake moons (illumination satellites)

This chart, created by Professor Roberto Trotta and Richard Watson at Imperial College London, boldly goes where no infographic has gone before. Thanks to Lawrence (Wond Design), Maria (Imperial Tech Foresight) and Sergiu (ex-Imperial, aerospace engineering). If NASA ever reads this, the answer is yes!

More on this tomorrow, hopefully, along with a printable PDF.

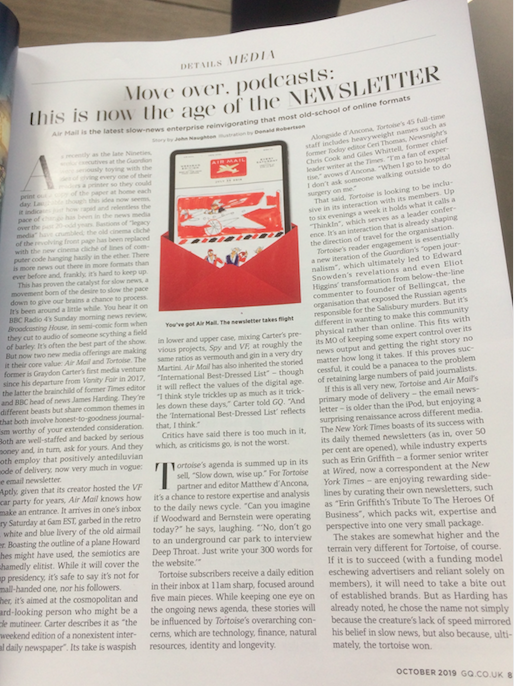

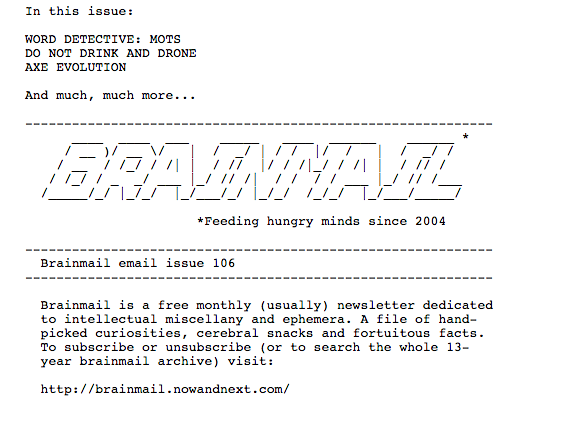

Here’s something I’ve discovered. Many things move up and down, and in some cases round and round. Therefore, if you have an idea that’s off-trend, or against conventional wisdom, the best thing to do in some instances is simply wait. Case in point: my own lo-fi newsletter, called brainmail. It ran for about 12 years, but I eventually killed it off due to GDPR, amongst other things. But it now looks like a hot new idea.

What was it that Confucius, or some other old dude, once said? Something like: if you sit on a riverbank and wait long enough, the body of your enemy will eventually float past. BTW, sitting by the water in Lisbon at the Web Summit, I felt this thought was especially true.

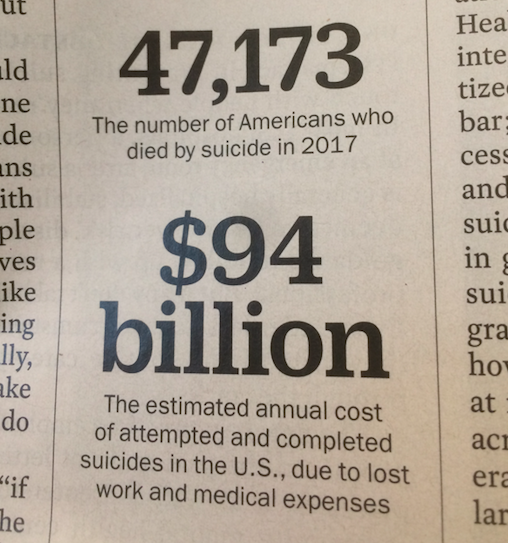

You might think that the cost of suicide, especially the cost to work, would be the last thing people would be thinking about.

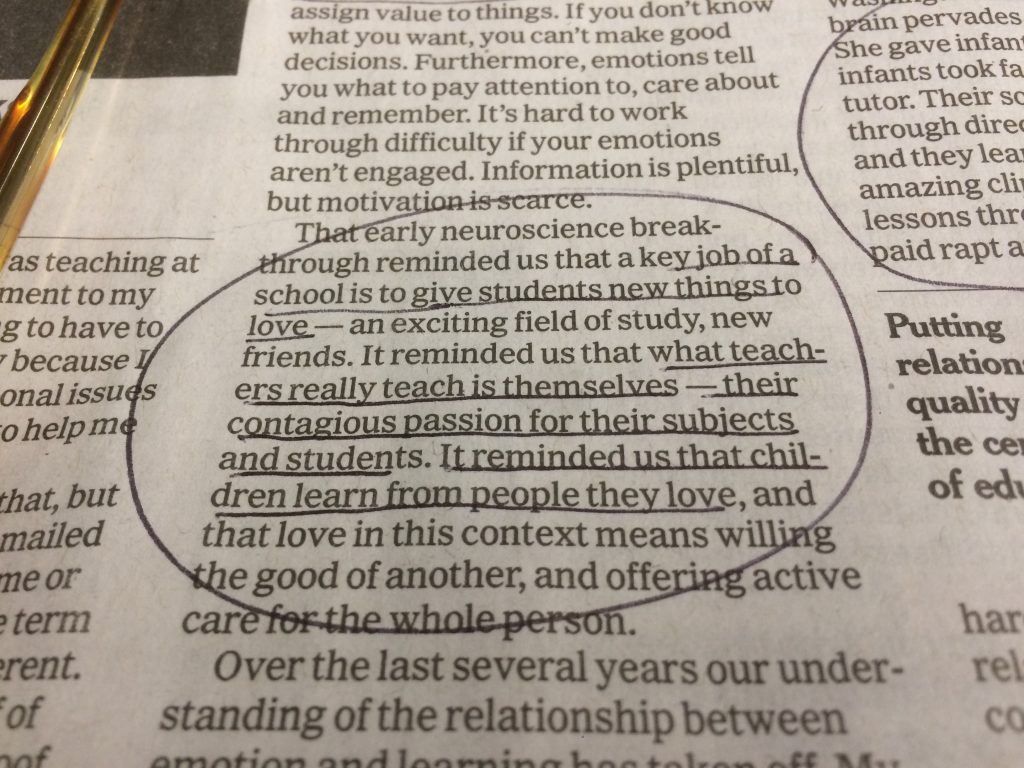

I may have posted this before (I’m getting old and forgetful). From the New York Times.