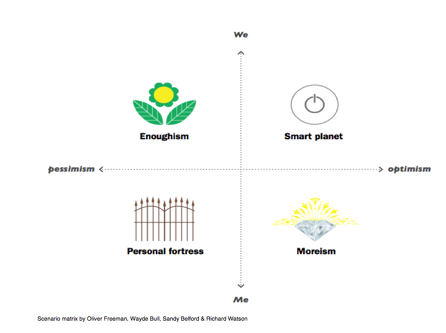

The Observer newspaper recently asked a group of experts for some predictions for what might happen over the next 25 years. Here are some extracts from the predictions, together with my own comments. Thanks to Bradley, who sent me the original link to this article. BTW, the original article came out on 2 January 2011, but many of the predictions refer to the year 2035, even though 2011 + 25 = 2036.

http://www.guardian.co.uk/society/2011/jan/02/25-predictions-25-years

1 ‘Rivals will take greater risks against the US’

“The 21st century will see technological change on an astonishing scale. It may even transform what it means to be human. But in the short term – the next 20 years – the world will still be dominated by the doings of nation-states and the central issue will be the rise of the east” – Ian Morris (see my post of January 14), professor of history at Stanford University and the author of Why the West Rules – For Now

Comment

I totally agree. And, to quote another author, Michael Mandelbaum, “The one thing worse than an America that is too strong, the world will learn, is an America that is too weak”. However, remember that the US is probably more resilient than many people imagine and China has more problems than many can see. I also agree that while science & technology make for great predictions (and scenarios), the most practical things you can look at are a few key indicators, such as demographics, energy, food and so on – all of which impact nation states.

2 ‘The popular revolt against bankers will become impossible to resist’

“The popular revolt against bankers, their current business model in which neglect of the real economy is embedded and the scale of their bonuses – all to be underwritten by bailouts from taxpayers – will become irresistible.” – Will Hutton, executive vice-chair of the Work Foundation and an Observer columnist

Comment

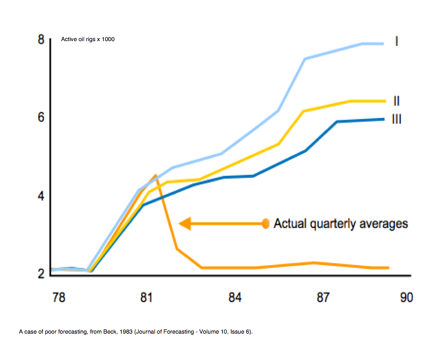

I came up with the same prediction back in late 2006, so I agree, but I don’t. At the moment this looks certain, but Hutton is making the classic mistake of extrapolating from the present. Banker bashing is a knee-jerk reaction. Moreover, it is too simplistic. What about the people who borrowed the money from the banks? Or what about the fact that interest rates have been at such low levels? For many people things aren’t that bad. I believe that the issue of banks will remain for a short period, but we will become concerned about something else in due course and the issue of bankers and banker bonuses will fade away. Unless, of course, there is indeed a second crisis of similar proportions, in which case things will change (but we will need a bigger crisis to create real shifts).

3 ‘A vaccine will rid the world of Aids’

“We will eradicate malaria, I believe, to the point where there are no human cases reported globally in 2035. We will also have effective means for preventing Aids infection, including a vaccine. With the encouraging results of the RV144 Aids vaccine trial in Thailand, we now know that an Aids vaccine is possible.” – Tachi Yamada, president of the global health programme at the Bill & Melinda Gates Foundation

Comment

I don’t know enough about this area to comment, so I won’t.

4 ‘Returning to a world that relies on muscle power is not an option’

“The challenge is to provide sufficient energy while reducing reliance on fossil fuels, which today supply 80% of our energy (in decreasing order of importance, the rest comes from burning biomass and waste, hydro, nuclear and, finally, other renewables, which together contribute less than 1%). Reducing use of fossil fuels is necessary both to avoid serious climate change and in anticipation of a time when scarcity makes them prohibitively expensive….disappointingly, with the present rate of investment in developing and deploying new energy sources, the world will still be powered mainly by fossil fuels in 25 years and will not be prepared to do without them.” – Chris Llewellyn Smith, former director general of Cern and chair of Iter, the world fusion project.

Comment

Bad choice of headline, but I agree with the prediction. In 25 years coal, oil and gas will still produce the vast majority (70-80%) of the world’s energy. Nuclear will be more important in some, but not all, regions. As for renewable energy, the issue is scale. Solar looks promising for some countries, but wind, wave and geothermal just don’t cut it as global solutions in my view. There is the possibility of some ‘magic bullet’ technology, but I think this will only happen when the cost of fossil fuels becomes so great we are forced to really do something.

5 ‘All sorts of things will just be sold in plain packages’

“In 25 years, I bet there’ll be many products we’ll be allowed to buy but not see advertised – the things the government will decide we shouldn’t be consuming because of their impact on healthcare costs or the environment, but that they can’t muster the political will to ban outright. So, we’ll end up with all sorts of products in plain packaging with the product name in a generic typeface – as the government is currently discussing for cigarettes.” – Russell Davies, head of planning at the advertising agency Ogilvy and Mather

Comment

I disagree. There will be more warnings – on everything from alcohol and confectionery to credit cards and cars – but I don’t agree with the plain wrapper theory.

6 ‘We’ll be able to plug information streams directly into the cortex’

“By 2030, we are likely to have developed no-frills brain-machine interfaces, allowing the paralysed to dance in their thought-controlled exoskeleton suits. I sincerely hope we will not still be interfacing with computers via keyboards, one forlorn letter at a time….I’d like to imagine we’ll have robots to do our bidding. But I predicted that 20 years ago, when I was a sanguine boy leaving Star Wars, and the smartest robot we have now is the Roomba vacuum cleaner. So I won’t be surprised if I’m wrong in another 25 years. AI has proved itself an unexpectedly difficult problem.” – David Eagleman, neuroscientist and writer

Comment

Best prediction on the list, not because I necessarily agree with it, but because it’s so provocative (that’s what predictions are for, surely?). Personally, I think this is all highly likely except for the bit about robots and the thought of downloading data directly into your mind – or of uploading your mind into a machine. We will have direct brain-to-machine interfaces (we already do – I’ve got one), which is much the same as mind control. We will ask our computers questions and they will answer back. The mouse and the keyboard will be long gone and machines will have some kind of emotional intelligence (they will know what mood you are in, for instance or they will be programmed to be afraid of doing certain things). However, I believe that the inventions the geeks really want (general AI or the ability to stream data directly into the cortex) are impossible for the foreseeable future.

7 ‘Within a decade, we’ll know what dark matter is’

“The next 25 years will see fundamental advances in our understanding of the underlying structure of matter and of the universe. At the moment, we have successful descriptions of both, but we have open questions. For example, why do particles of matter have mass and what is the dark matter that provides most of the matter in the universe?” – John Ellis, theoretical physicist at Cern and King’s College London

Comment

A 50% chance of this coming true. The real question, the biggest question of them all perhaps, is where we’ll go from there. Personally, I find the idea of where the galaxies came from and where they (we) are going over the much longer term quite fascinating.

8 ‘Russia will become a global food superpower’

“By the middle of that decade (2035), therefore, we will either all be starving, and fighting wars over resources, or our global food supply will have changed radically. The bitter reality is that it will probably be a mixture of both… in response to increasing prices, some of us may well have reduced our consumption of meat, the raising of which is a notoriously inefficient use of grain. This will probably create a food underclass, surviving on a carb- and fat-heavy diet, while those with money scarf the protein… Russia will become a global food superpower as the same climate change opens up the once frozen and massive Siberian prairie to food production.” – Jay Rayner, TV presenter and the Observer’s food critic

Comment

Disagree. First, there is a big assumption here about climate change and global warming. Personally, I think one should remain open about some of the predictions on this subject and look at several scenarios, including global cooling.

If cooling were to happen, things could look very nasty for Russia. Second, I think the timescale of 2036 is too short for this prediction and, third, Russia has some other issues, especially demography and health, to focus on before it worries about becoming a food superpower. BTW, Rayner says population will be nudging 9 billion by 2035. I think that could be wrong. I think it’s 2050.

9 ‘Privacy will be a quaint obsession’

“Some, like the futurist Ray Kurzweil, predict that nanotechnology will lead to a revolution, allowing us to make any kind of product for virtually nothing; to have computers so powerful that they will surpass human intelligence; and to lead to a new kind of medicine on a sub-cellular level that will allow us to abolish ageing and death… I don’t think that Kurzweil’s “technological singularity” – a dream of scientific transcendence that echoes older visions of religious apocalypse – will happen. Some stubborn physics stands between us and “the rapture of the nerds”. But nanotech will lead to some genuinely transformative applications.” – Richard Jones, pro-vice-chancellor for research and innovation at the University of Sheffield

Comment

I don’t see the relationship here between the prediction (the headline) and the text of the story. Yes, nanotech has privacy implications, but they are nothing compared to what the internet is doing – or possibly genetics. Do I agree with the prediction about privacy being deleted? I haven’t made my mind up yet.

10 Gaming: ‘We’ll play games to solve problems’

“In the last decade, in the US and Europe but particularly in south-east Asia, we have witnessed a flight into virtual worlds, with people playing games such as Second Life. But over the course of the next 25 years, that flight will be successfully reversed, not because we’re going to spend less time playing games, but because games and virtual worlds are going to become more closely connected to reality.” – Jane McGonigal, director of games research & development at the Institute for the Future in California

Comment

I agree (but taken too far, I don’t like the idea).

11 ‘Quantum computing is the future’

“The open web created by idealist geeks, hippies and academics, who believed in the free and generative flow of knowledge, is being overrun by a web that is safer, more controlled and commercial, created by problem-solving pragmatists. Henry Ford worked out how to make money by making products people wanted to own and buy for themselves. Mark Zuckerberg and Steve Jobs are working out how to make money from allowing people to share, on their terms… By 2035, the web, as a single space largely made up of webpages accessed on computers, will be long gone… and by 2035 we will be talking about the coming of quantum computing, which will take us beyond the world of binary, digital computing, on and off, black and white, 0s and 1s.” – Charles Leadbeater, author and social entrepreneur.

Comment

I don’t think this is saying very much.

12 Fashion: ‘Technology creates smarter clothes’

“Technology is already being used to create clothing that fits better and is smarter; it is able to transmit a degree of information back to you. This is partly driven by customer demand and the desire to know where clothing comes from – so we’ll see tags on garments that tell you where every part of it was made, and some of this, I suspect, will be legislation-driven, too, for similar reasons, particularly as resources become scarcer and it becomes increasingly important to recognise water and carbon footprints.” – Dilys Williams, designer and the director for sustainable fashion at the London College of Fashion

Comment

Define smart? I see a polarization between high-tech fashion (wearable computers, smart fabrics, global brands) and sustainable clothing (often locally sourced and highly functional).

13 Nature: ‘We’ll redefine the wild’

“I’m confident that the charismatic mega fauna and flora will mostly still persist in 2035, but they will be increasingly restricted to highly managed and protected areas… Increasingly, we won’t be living as a part of nature but alongside it, and we’ll have redefined what we mean by the wild and wilderness… Crucially, we are still rapidly losing overall biodiversity, including soil micro-organisms, plankton in the oceans, pollinators and the remaining tropical and temperate forests. These underpin productive soils, clean water, climate regulation and disease-resistance. We take these vital services from biodiversity and ecosystems for granted, treat them recklessly and don’t include them in any kind of national accounting.” – Georgina Mace, professor of conservation science and director of the Natural Environment Research Council’s Centre for Population Biology, Imperial College London

Comment

Sounds perfectly reasonable to me.

14 Architecture: What constitutes a ‘city’ will change

“In 2035, most of humanity will live in favelas. This will not be entirely wonderful, as many people will live in very poor housing, but it will have its good side. It will mean that cities will consist of series of small units organised, at best, by the people who know what is best for themselves and, at worst, by local crime bosses…Cities will be too big and complex for any single power to understand and manage them. They already are, in fact. The word “city” will lose some of its meaning: it will make less and less sense to describe agglomerations of tens of millions of people as if they were one place, with one identity. If current dreams of urban agriculture come true, the distinction between town and country will blur.” – Rowan Moore, Observer architecture correspondent

Comment

Urban agriculture is pure fantasy in my view, especially vertical farming. I agree that in 25 years most people will probably live in poor housing but it will probably be an improvement on what people lived in 25 years ago.

Most people will definitely live in cities, but I suspect that with the exception of a handful of iconic individual buildings, office complexes and retail developments, most people will continue to live in what we, living in 2011, would immediately recognize as cities.

15 Sport: ‘Broadcasts will use holograms’

“Globalisation in sport will continue: it’s a trend we’ve seen by the choice of Rio for the 2016 Olympics and Qatar for the 2022 World Cup. This will mean changes to traditional sporting calendars in recognition of the demands of climate and time zones across the planet…Sport will have to respond to new technologies, the speed at which we process information and apparent reductions in attention span. Shorter formats, such as Twenty20 cricket and rugby sevens, could aid the development of traditional sports in new territories.” – Mike Lee, chairman of Vero Communications and ex-director of communications for London’s 2012 Olympic bid.

Comment

I am just about to start work looking at the future of sport, so it’s a bit early to really comment on this one. Yes, the globalization of sport will probably continue, but we should not underestimate counter-trends (e.g. local or ‘real football’ as an alternative to global football brands with mega-budgets). Yes to new technologies too, and I certainly agree with short-format sport as a reaction to shorter attention spans (golf is next for the short-format treatment, apparently).

16 Transport: ‘There will be more automated cars’

“It’s not difficult to predict how our transport infrastructure will look in 25 years’ time – it can take decades to construct a high-speed rail line or a motorway, so we know now what’s in store. But there will be radical changes in how we think about transport. The technology of information and communication networks is changing rapidly and internet and mobile developments are helping make our journeys more seamless. Queues at St Pancras station or Heathrow airport when the infrastructure can’t cope for whatever reason should become a thing of the past.” – Frank Kelly, professor of the mathematics of systems at Cambridge University.

Comment

Agree with the first bit, but good luck with the point about queues and seamless journeys. First, I think he could be overestimating the impact of technology and, second (more importantly), he is neglecting economics. In the West especially, governments are burdened by debt and rising urban populations, and it’s quite possible that much public infrastructure will be in very poor repair by 2036.

17 Health: ‘We’ll feel less healthy’

“Health systems are generally quite conservative. That’s why the more radical forecasts of the recent past haven’t quite materialised. Contrary to past predictions, we don’t carry smart cards packed with health data; most treatments aren’t genetically tailored; and health tourism to Bangalore remains low. But for all that, health is set to undergo a slow but steady revolution. Life expectancy is rising about three months each year, but we’ll feel less healthy, partly because we’ll be more aware of the many things that are, or could be, going wrong, and partly because more of us will be living with a long-term condition.” – Geoff Mulgan, chief executive of the Young Foundation

Comment

Agreed.

18 Religion: ‘Secularists will flatter to deceive’

“Organised religions will increasingly work together to counter what they see as greater threats to their interests – creeping agnosticism and secularity… I predict an increase in debate around the tension between a secular agenda which says it is merely seeking to remove religious privilege, end discrimination and separate church and state, and organised orthodox religion which counterclaims that this would amount to driving religious voices from the public square.” – Dr Evan Harris, author of a secularist manifesto

Comment

Fascinating subject (I’d love to do some scenarios on the future of religion). I agree with some of this. I think that the world will become more religious over the next 25 years but the idea that secularization will grow is potentially suspect.

19 Theatre: ‘Cuts could force a new political fringe’

“Student marches will become more frequent and this mobilisation may breed a more politicised generation of theatre artists. We will see old forms from the 1960s re-emerge (like agit prop) and new forms will be generated to communicate ideology and politics.” – Katie Mitchell, theatre director.

Comment

Disagree. Yes, this could happen, but I think a much stronger trend could be quite the opposite. If the economy (in the West) fails to pick up and we enter a prolonged period of doom and gloom, I would predict that people will get so sick and fed up that sugary, escapist, nostalgic fantasies and musicals will thrive.

20 Storytelling: ‘Eventually there’ll be a Twitter classic’

“Twenty-five years from now, we’ll be reading fewer books for pleasure. But authors shouldn’t fret too much; e-readers will make it easier to impulse-buy books at 4am even if we never read past the first 100 pages…My guess is that, in 2035, stories will be ubiquitous. There’ll be a tube-based soap opera to tune your iPod to during your commute, a tale (incorporating on-sale brands) to enjoy via augmented reality in the supermarket. Your employer will bribe you with stories to focus on your job. Most won’t be great, but then most of everything isn’t great – and eventually there’ll be a Twitter-based classic.” – Naomi Alderman, novelist and games writer

Comment

I’d predict that in 25 years Twitter won’t exist (and I’ll take a bet on that).