Monthly Archives: June 2016

EU referendum result

Retail Trends

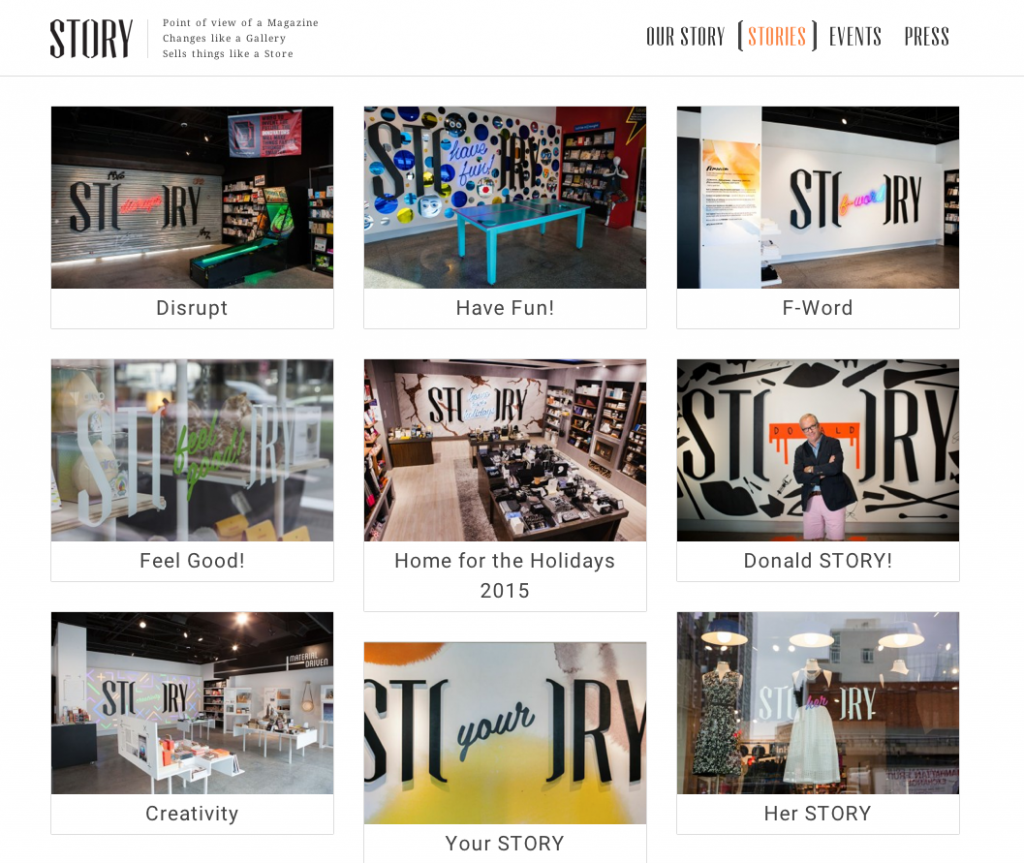

In my new book Digital Vs. Human I featured a ‘future flash’ about a new kind of store. Seems someone has beaten me to it. Here’s my fictional flash…

1 June 2020

I’ve been thinking about shops, partly because I remembered what Theodore was saying about retailers generally missing the point about why people go shopping. He suggested that people partly shop to get out of the solitude and boredom that is their own home. Shopping is therefore, in varying degrees, social and is often more about the other human beings people meet and interact with than the things they buy. Historically, shopping was once very social. Half of all London shops once took in lodgers and many, if not most, Parisian shops were located beneath flats or inside houses.

So here’s my idea for a new kind of shop. It would be called 5 Things to Change Your Life. Each month the shop would curate 5 items that could change someone’s mind about something. For example, several copies of Dark Side of the Moon on vinyl, a bottle of Chateau d’Yquem 1976, a dozen well-thumbed copies of The Worst Journey in the World by Apsley Cherry-Garrard, a pepper grinder that works properly and 48 hours of total silence at a monastic retreat.

But here’s the thing. The shop would openly seek conversations with its customers, encouraging them to visit the shop to explain their choices to others. We would explain each item’s history and provenance, even providing the contact details of previous owners. The shop would also host events, including poetry readings, live music, cookery demonstrations and art exhibitions. And it would help people to exchange skills, find jobs and maybe even receive marriage proposals.

What do you think? Stick with Amazon?

Best, Nick.

And here’s the fact.

Story is a 2,000 square foot store set on Manhattan’s 10th Avenue that takes its point of view from a magazine. Namely, it changes with every ‘issue’ – usually every 6-8 weeks. The store was set up by Rachel Shechtman and follows a particular theme, trend or idea each time it reinvents itself.

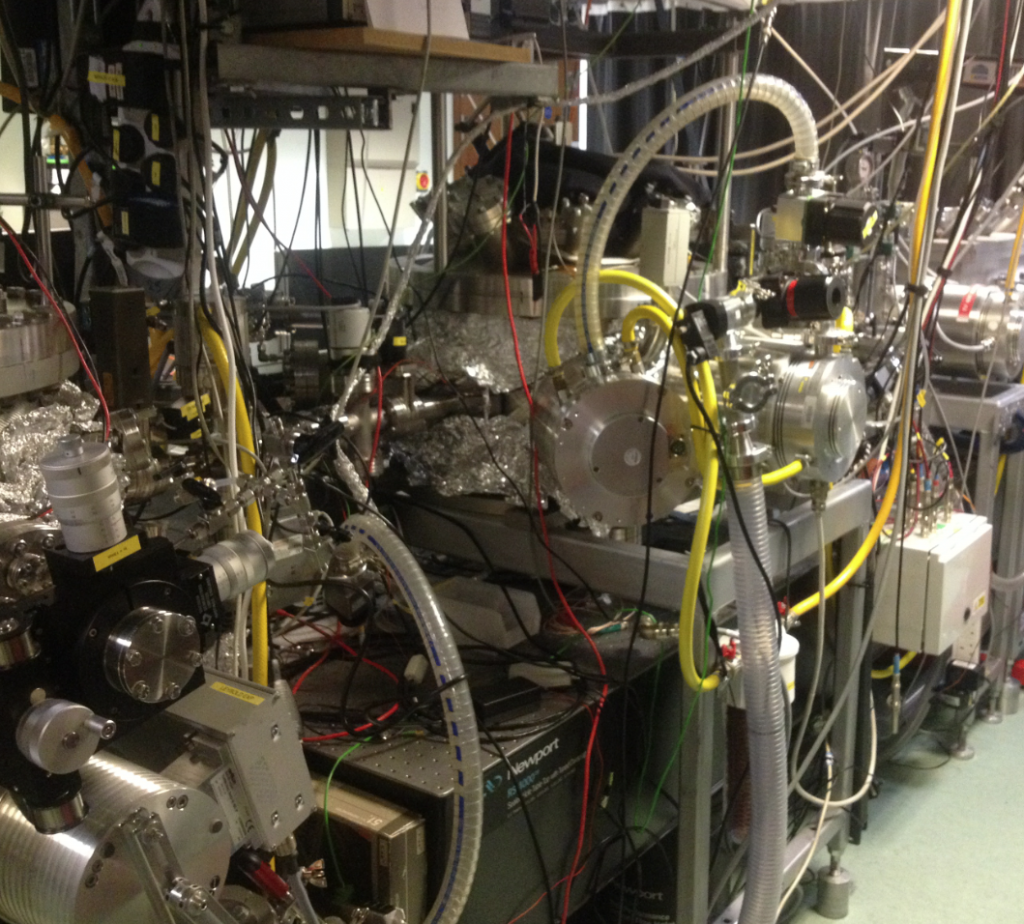

Mind benders

This is too good not to share. Having had my head scrambled by space-based lasers that can monitor the health of the Earth’s vegetation down to a single plant, I then stumbled into Attosecond physics (lab at Imperial above). What’s an attosecond, I hear you ask? Well, an attosecond is to a second what a second is to the age of the universe. Get your head around that.
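The comparison can be checked with some rough arithmetic. The sketch below assumes the universe is about 13.8 billion years old, which is a commonly quoted estimate and not a figure from this post; the variable names are purely illustrative.

```python
# Rough check of the attosecond analogy.
# Assumption (not from the post): the universe is about 13.8 billion years old.
ATTOSECOND_S = 1e-18                      # one attosecond in seconds (SI prefix "atto")
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60  # length of a Julian year in seconds
UNIVERSE_AGE_YEARS = 13.8e9               # assumed estimate

# How many attoseconds fit into one second?
attoseconds_per_second = 1 / ATTOSECOND_S

# How many seconds fit into the (assumed) age of the universe?
universe_age_s = UNIVERSE_AGE_YEARS * SECONDS_PER_YEAR

# If the analogy were exact, this ratio would be 1.
ratio = attoseconds_per_second / universe_age_s

print(f"attoseconds in one second:          {attoseconds_per_second:.2e}")
print(f"seconds in the age of the universe: {universe_age_s:.2e}")
print(f"ratio of the two:                   {ratio:.1f}")
```

Under that assumption the two numbers agree to within a factor of about two, so the analogy holds up as a rule of thumb.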

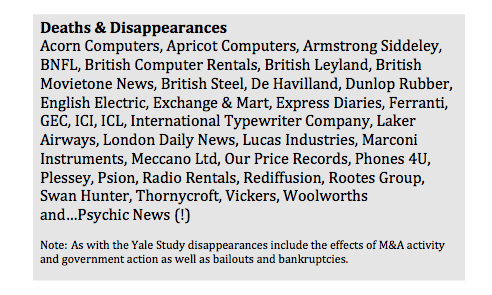

Why Companies Die

Why is it that the average lifespan of an S&P 500 company in the US has fallen from 67 years in the 1920s to just 15 years today? And why might 75% of firms in the S&P 500 now be gone or going by the year 2027 or thereabouts?

These figures come from a study by Richard Foster at the Yale School of Management and echo another from the Santa Fe Institute that found that publicly quoted firms die at similar rates regardless of age or industry sector. In this second study the average lifespan of American companies was put at just 10 years. The reason given for most of these companies dying or disappearing is a merger or acquisition.* A third US study** of S&P companies reports an average company age of 61 in 1958, 25 in 1980 and around 18 today, but the trend toward shorter lives remains regardless of which study you read.

In the UK it’s a similar story. Of the 100 companies in the FTSE 100 in 1984, only 24 were still breathing in 2012, although average corporate lifespans in the UK were somewhat longer. However, with start-ups it’s back to bleak, with almost 50% of SMEs failing to celebrate their 5th birthday. The reasons UK start-ups fail are said to include cash flow issues, a lack of bank lending, too much red tape, high business rates and competition.

With larger enterprises in the UK and elsewhere the situation can be somewhat different. The main reason that big companies die – beyond being consumed by larger or more aggressive companies – is that they fail to anticipate or react to new technology, new customer demands or competitors with new business models, products and services, all of which are often linked and can cause considerable disruption and disturbance.

This is Darwinian evolution applied to capitalism and the only solution is to keep your eyes and ears wide open for predators and to continually evolve what you do through a process of constant adaptation and occasionally accelerated mutation.

The list of corporate casualties is certainly long. Most people are aware of how things developed at Kodak, but the list of companies killed off or seriously injured by new technologies, new competitors or new customer behaviours includes a roll call of previously proud British names including Ferranti, Psion, Acorn Computers, De Havilland, British Leyland, British Steel, ICI, Marconi, Swan Hunter, Armstrong Siddeley, GEC and ICL.

Interestingly, in each case the writing was on the wall long before many of these companies went bankrupt or were taken over, but if there’s one thing that you can rely on with big companies it’s that, like super-tankers, they can take a long time to change direction and the view from the bridge is often partially obscured or heavily contested.

Nothing recedes like Success

Putting to one side new technologies, new competition and new customer demands, a key point is geriatric corporate cultures. Bill Gates once said that “success is a lousy teacher, it seduces smart people into thinking they can’t lose.” In other words, nothing recedes quite like success and large companies can become delusional about their fitness, their intellect or the speed and energy with which new ideas and inventions can move.

If arrogance is one silent killer, another is that as companies grow bigger, management can become distanced from both insight and innovation. Peter Drucker made this point decades ago, although he used the word entrepreneurship. Managing and innovating are different dimensions of the same task, but most large companies regard them as separate to the point of putting them in different departments or locations. As the urgency to stay alive financially evaporates, the focus shifts away from urgent opportunities and threats to lethargic internal issues, and a kind of corporate immune system develops whereby new ideas tend to be rejected by the corporate body the minute they form.

If you drop down the organisation chart to departments such as customer complaints, this isn’t always the case. People working in customer relations, IT, sales or even accounts can be extremely close to customers, and hence to the inception of new ideas, but senior management often writes off these departments as cost centres rather than hotbeds of insight and innovation. With R&D it’s often much the same story, with scientists and engineers being regarded as grey-suited bureaucrats offering up ponderous improvements rather than white-coated warriors fighting for discoveries that could transform the company.

The culture of organisations contributes to failure in other ways too. The dominant culture of most very large companies is highly conservative and quite rightly so. Publicly quoted firms primarily exist to provide a regular return to shareholders and to keep workers in regular employment. But to do both these things they must also deliver constantly evolving products that create value for customers.

This is like a tightrope that’s not only swaying in the wind, but is being constantly moved and adjusted at one end while you’re still walking along it. Interestingly, Mark Vergnano, an executive VP at DuPont, once made a similar point with regard to R&D, saying that: “Research and development is always a delicate balance between maintaining a long-term view and remaining sensitive to short-term financial objectives.”

To sum up, if companies wish to remain healthy and grow old they need to do two things. Firstly, they need to remain young at heart. They need to remain mentally agile, constantly learn new things and question their own identity and reason for being.

This means repeatedly asking what business they’re really in and how best they can serve both current and future customers using current and future technologies, channels and business models.

Secondly, companies must look at innovation from a whole business perspective and make innovation truly cross-functional. If innovation exists purely at a departmental, product or service level it’s unlikely to proceed beyond incremental refinement. Continuous improvement is essential, but it’s merely a ticket to stay in the game. To win the game companies must consider more radical developments including the ground up reinvention of everything they do and also link innovation to strategy.

Three quick ways to extend the lifespan of your company:

1. Constantly look out for and test new ideas that could breathe new life into your company or fatally wound it if applied by a competitor.

2. In a similar vein don’t simply keep an eye on what your closest competitors are doing, also study what small start-ups outside your immediate market or geography are doing too. Are they doing anything unusual? Are they doing anything that doesn’t immediately make sense? If so dig into why.

3. Keep a close eye on what your youngest customers and employees are doing. What are they doing that you aren’t? Could such behaviour be an early warning signal of change?

Footnotes.

* A merger or acquisition isn’t necessarily a business failure, but can nevertheless be a sign of weakness or long-term illness.

**Innosight study (US).

If you’re wondering, the world’s oldest limited liability corporation is Stora Enso, a Finnish paper and pulp manufacturer that started out as a mining company in 1288.

Quote of the week (about digital culture)

After yesterday’s essay, something short.

“The complicated, ambiguous milieu of human contact is being replaced with simple, scalable equations. We maintain thousands more friends than any human being in history, but at the cost of complexity and depth.”

Daniel H. Wilson, author of How to Survive a Robot Uprising.

Sensing the Futures (how to challenge ruling narratives)

Here’s a prediction. You are reading this because you believe that it’s important to have a sense of what’s coming next. Or perhaps you believe that since disruptive events are becoming more frequent you need more warning about potential game-changers, although at the same time you’re frustrated by the unstructured nature of futures thinking.

Foresight is usually defined as the act of seeing or looking forward – or to be in some way forewarned about future events. In the context of science and technology it can be interpreted as an awareness of the latest discoveries and where these may lead, while in business and politics it’s generally connected with an ability to think through longer-term opportunities and risks, be these geopolitical, economic or environmental. But how does one use foresight? What practical tools are available for companies to stay one step ahead of the future and to deal with potential disruption?

The answer to this depends on your state of mind. In short, if alongside an ability to focus on the here and now you have – or can develop – a corporate culture that’s furiously curious, intellectually promiscuous, self-doubting and meddlesome, you are likely to be far more effective at foresight than if you doggedly stick to a single idea or worldview. This is because the future is rarely a logical extension of single ideas or conditions. Furthermore, even when it looks as though this may be so, everything from totally unexpected events and feedback loops to behavioural change, pricing, taxation and regulation has a habit of tripping up even the best-prepared linear forecasts.

Always look both ways

In other words, when it comes to the future most people aren’t really thinking, they are just being logical based on small sets of data or recent personal experience.

The future is inherently unpredictable, but this gives us a clue as to how best to deal with it. If you accept – and how can you not – that the future is uncertain then you must accept that there will always be numerous ways in which the future could play out. Developing a prudent, practical, pluralistic mind-set that’s not narrow, self-assured, fixated or over-invested in any singular outcome or future is therefore a wise move.

This is similar in some respects to the scientific method, which seeks new knowledge based upon the formulation, testing and subsequent modification of a hypothesis. The scientific method is perhaps best summed up by the idea that you should always keep an open mind about what’s possible whilst simultaneously remaining somewhat cynical.

Not blindly accepting conventional wisdom, being questioning and self-critical, looking for opposing forces, seeking out disagreement and, above all, being open to anomalies are all ways of ensuring agility and most of all resilience in what is becoming an increasingly febrile and inconstant world.

This is all much easier said than done, of course. Homo sapiens is a pattern-seeking species and two of the things we loathe are randomness and uncertainty. We are therefore drawn to forceful personalities with apparent expertise who build narrative arcs from a subjective selection of so-called ‘facts’. Critically, such narratives can force linkages between events that are unrelated or ignore important factors.

Seeking singular drivers of change or maintaining a simple positive or negative attitude toward any new scientific, economic or political development is therefore easier than constantly looking for complex interactions or erecting a barrier of scepticism about ideas that almost everyone else appears to agree upon or accept without question.

Danger: hidden assumptions

In this context a systems approach to thinking can pay dividends. In a globalised, hyper-connected world, few things exist in isolation and one of the main reasons that long-term planning can go so spectacularly wrong is the oversimplification of complex systems and relationships.

Another major factor is assumption, especially the hidden assumptions about how industries or technologies will evolve or how individuals will behave in relation to new ideas or events. The historical hysteria about Peak Oil might be a case in point. Putting to one side the assumption that we’ll need oil in the future, the amount of oil that’s available depends upon its price. If the price is high there’s more incentive to discover and extract more oil. A high oil price fuels a search for alternative energy sources, but also incentivises behavioural change at both an individual and governmental level. It’s not an equal and opposite reaction, but the dynamic tensions inherent within powerful forces mean that balancing forces do often appear over time.

Thus we should always think in terms of technology plus psychology or one factor combined with others. In this context, one should also consider wildcards. These are forces that appear out of nowhere or which blindside us because we’ve discounted their importance. For example, who could have foreseen the importance of the climate change debate in the early 2000s or its relative disappearance in the aftermath of the global financial crisis in 2007/8?

Similarly, it can often be useful to think in terms of future and past. History gives us clues about how people have behaved before and may behave again. Therefore it’s often worth travelling backwards to explore the history of industries, products or technologies before travelling forwards.

If hidden assumptions, the extrapolation of recent experience, and the interplay of multiple factors are three traps, cognitive biases are a fourth. The human brain is a marvellous thing, but too often it tricks us into believing that something that’s personal or subjective is objective reality. For example, unless you are aware of confirmation bias it’s difficult to unmake your mind once it’s made up. Back to Peak Oil and Climate Change scepticism perhaps.

Once you have formed an idea about something – or someone – your conscious mind will seek out data to confirm your view, while your subconscious will block anything that contradicts it. This is why couples argue, why companies steadfastly refuse to evolve their strategy and why countries accidentally go to war. Confirmation bias also explains why we persistently think that things we have experienced recently will continue into the future. Similar biases mean that we stick to strategies long after they should have been abandoned (loss aversion) or fail to see things that are hidden in plain sight (inattentional blindness).

In 2013, a study in the US called the Good Judgment Project asked 20,000 people to forecast a series of geopolitical events. One of its key findings was that an understanding of these natural biases produced better predictions. An understanding of probabilities was also shown to be of benefit, as was working as part of a team where a broad range of options and opinions was discussed. You have to be aware of another bias – Group Think – in this context, but as long as you are aware of the power of consensus you can at least work to offset its negative aspects.

Being aware of how people relate to one another also brings to mind the thought that being a good forecaster doesn’t only mean being good at forecasts. Forecasts are no good unless someone is listening and is prepared to take action. Thinking about who is and who is not invested in certain outcomes – especially the status quo – can improve the odds when it comes to being heard. What you say is important, but so too is whom you speak to and how you illustrate your argument, especially in organisations that are more aligned to the world as it is than the world as it could become.

Steve Sasson, the Kodak engineer who invented the world’s first digital camera in 1975, showed his invention to Kodak’s management and their reaction was: “That’s cute, but don’t tell anyone.” Eventually Kodak commissioned research, the conclusion of which was that digital photography could be disruptive. However, it also said that Kodak would have a decade to prepare for any transition. This was all Kodak needed to hear to ignore it. It wasn’t digital photography per se that killed Kodak, but the emergence of photo-sharing and of group think that equated photography with printing. The end result, though, was much the same.

Good forecasters are good at getting other people’s attention through the use of narratives or visual representations. Just look at the power of science fiction, especially movies, versus that of white papers or PowerPoint presentations.

If the engineers at Kodak had persisted, or had brought to life changing customer attitudes and behaviours through the use of vivid storytelling – or perhaps photographs or film – things might have developed rather differently.

Find out what you don’t know

Beyond thinking about your own thinking and thinking through whom you speak to and how you illustrate your argument, what else can you do to avoid being caught on the wrong side of future history? According to Michael Raynor at Deloitte Research, strategy should begin with an assessment of what you don’t know, not with what you do. This is reminiscent of Donald Rumsfeld’s infamous ‘unknown unknowns’ speech.

“Reports that say that something hasn’t happened are always interesting to me, because as we know, there are known knowns; there are things we know we know. We also know there are known unknowns; that is to say we know there are some things we do not know. But there are also unknown unknowns – the ones we don’t know we don’t know….”

The language that’s used here is tortured, but it does fit with the viewpoint of several leading futurists including Paul Saffo at the Institute for the Future. Saffo has argued that one of the key goals of forecasting is to map uncertainties.

What forecasting is about is uncovering hidden patterns and unexamined assumptions, which may signal significant revenue opportunities or threats in the future.

Hence the primary aim of forecasting is not to precisely predict, but to fully identify a range of possible outcomes, which includes elements and ideas that people haven’t previously known about, taken seriously or fully considered. The most useful starter question in this context is: ‘What’s next?’ but forecasters must not stop there. They must also ask: ‘So what?’ and consider the full range of ‘What if?’

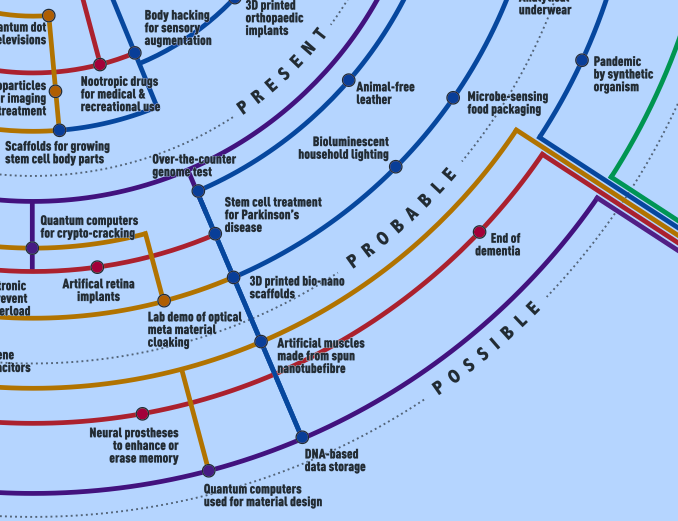

Consider the improbable

A key point here is to distinguish between what’s probable and what’s possible. Sherlock Holmes said that: “Once you eliminate the impossible, whatever remains, no matter how improbable, must be the truth.” This statement is applicable to forecasting because it is important to understand that improbability does not imply impossibility. Most scenarios about the future consider an expected or probable future and then move on to include other possible futures. But unless improbable futures are also considered, significant opportunities or vulnerabilities will remain unseen.

This is all potentially moving us into the territory of risks rather than foresight, but both are connected. Foresight can be used to identify commercial opportunities, but it is equally applicable to due diligence or the hedging of risk. Unfortunately, this thought is lost on many corporations and governments, which shy away from such long-term thinking or assume that new developments will follow a simple straight line. What invariably happens, though, is that change tends to follow an S-curve and developments have a tendency to change direction when counter-forces inevitably emerge.
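To make the difference between a straight line and an S-curve concrete, here is a minimal sketch. It uses the logistic function, which is one common way of drawing an S-curve; the essay does not specify a formula, and every parameter value and function name below is an illustrative assumption rather than data.

```python
import math

def s_curve(t, ceiling=100.0, midpoint=10.0, steepness=0.5):
    """Logistic (S-shaped) level at time t. All parameters are illustrative."""
    return ceiling / (1.0 + math.exp(-steepness * (t - midpoint)))

def straight_line_forecast(t, t0=6.0, t1=8.0):
    """Extend the straight line through the curve's values at t0 and t1 out to t."""
    slope = (s_curve(t1) - s_curve(t0)) / (t1 - t0)
    return s_curve(t1) + slope * (t - t1)

# Compare what actually happens on the S-curve with a forecast made
# by someone who only saw the early years and assumed a straight line.
print("time  s_curve  straight_line")
for t in (8, 12, 16, 20):
    print(f"{t:>4}  {s_curve(t):7.1f}  {straight_line_forecast(t):13.1f}")
```

With these made-up numbers the straight line first lags the real growth and later sails past the ceiling that the S-curve never exceeds, which is the sense in which a trend "bends" away from a linear forecast.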

Knowing precisely when a trend will bend is almost impossible, but keeping in mind that many will is itself useful knowledge. The Hype Cycle developed by Gartner Research is also helpful in this respect because it helps us to separate recent developments or fads (the noise) from deeper or longer-term forces (the signal). The Gartner diagram links to another important point too, which is that because we often fail to see broad context we have a tendency to simplify.

This means that we ignore market inertia and consequently overestimate the importance of events in the shorter term, whilst simultaneously underestimating their importance over much longer timespans.

An example of this tendency is the home computer. In the 1980s, most industry observers were forecasting a Personal Computer in every home. They were right, but this took much longer than expected and, more importantly, we are not using our home computers for word processing or to view CDs as predicted. Instead we are carrying mobile computers (phones) everywhere, which is driving universal connectivity, the Internet of Things, smart sensors, big data and predictive analytics, which are in turn changing our homes, our cities, our minds and much else besides.

Drilling down to reveal the real why

What else can you do to see the future early? One trick is to ask what’s really behind recent developments. What are the deep technological, regulatory or behavioural drivers of change? But don’t stop there.

Dig down beyond the shifting sands of popular trends to uncover the hidden bedrock upon which new developments are being built. Then balance this out against the degree of associated uncertainty.

Other tips might include travelling to parts of the world that are in some way ahead technologically or socially. If you wish to study the trajectory of ageing or robotics, for instance, Japan is a good place to start. This is because Japan is the fastest ageing country on earth and has been curious about robotics longer than most. Japan is therefore looking at the use of robots to replace people in various roles ranging from kindergartens to aged-care.

You can just read about such things, of course. New Scientist, Scientific American, MIT Technology Review and The Economist Technology Quarterly are all ways to reduce your travel budget, but seeing things with your own eyes tends to be more effective. Speaking with early adopters (often, but not exclusively, younger people) is useful too, as is spending time with heavy or highly enthusiastic users of particular products and services.

Academia is a useful laboratory for futures thinking too, as are the writings of some science fiction authors. And, of course, these two worlds can collide. It is perhaps no coincidence that the sci-fi author HG Wells studied science at what was to become Imperial College London or that many of the most successful sci-fi writers, such as Isaac Asimov and Arthur C. Clarke, have scientific backgrounds.

So find out what’s going on within certain academic institutions, especially those focussed on science and technology, and familiarise yourself with the themes the best science-fiction writers are speculating about.

Will doing any or all of these things allow you to see the future in any truly useful sense? The answer to this depends upon what it is that you are trying to achieve. If your aim is granular – to get the future 100% correct – then you’ll be 100% disappointed. However, if your aim is to highlight possible directions and discuss potential drivers of change there’s a very good chance that you won’t be 100% wrong. Thinking about the distant future is inherently problematic, but if you spend enough time doing so it will almost certainly beat not thinking about the future at all.

Moreover, creating the time to peer at the distant horizon can result in something more valuable than planning or prediction. Our inclination to relate discussions about the future to the present means that the more time we spend thinking about future outcomes the more we will think about whether what we are doing right now is correct. Perhaps this is the true value of forecasting: it allows us to see the present with far greater clarity and precision.

This is the first of three essays about foresight and thinking about the future written by Richard Watson for Tech Foresight at Imperial College. Part two is about the use of scenarios to anticipate change while part three is about the use – and misuse – of trends.

Illustrations:

Diagram 1: Timeline of emerging science & technology, Tech Foresight, Imperial College, 2015.

Diagram 2: Gartner Hype Cycle

Quote of the Week… and much, much more

So much to think about at the moment, which is why I’ve been rather absent of late. I’m working on something looking at the future of water, some provocation cards for a Tech Foresight 2016 event and something on risk for London Business School.

But more interesting than all of this is something I heard on the radio this morning. Don’t even ask why I was listening to Katy Perry, but I was, and a song called Part of Me came on. One of the lines was:

“I just want to throw my phone away. Find out who is really there for me.”

Normally I wouldn’t pay much attention to this, but there’s another song I heard not so long ago by James Blunt with the line:

“Seems that everyone we know’s out there waiting by a phone wondering why they feel alone.”

And last week someone was telling me how students at an art college had abandoned ‘digital installations’ in favour of painting and drawing. Are we seeing some kind of rebalancing emerging here?

Oh, the quote…

“If we allow our self-congratulatory adoration of technology to distract us from our own contact with each other, then somehow the original agenda has been lost.” – Jaron Lanier.

Me My Selfie and I

This is from Aeon magazine and worth repeating in full in my view.

I’m having dinner with my flatmates when my friend Morgan takes a picture of the scene. Then she sits back down and does something strange: she cocks her head sideways, crosses her eyes, and aims the phone at herself. Snap. Whenever I see someone taking a selfie, I get an awkward feeling of seeing something not meant to be seen, somewhere between opening the unlocked door of an occupied toilet and watching the blooper reel of a heavy-handed drama. It’s like peeking at the private preparation for a public performance.

In his film The Phantom of Liberty (1974), the director Luis Buñuel imagines a world where what should and shouldn’t be seen are inverted. In a ‘dinner party’, you shit in public around a table with your friends, but eat by yourself in a little room. The suggestion is that the difference between the public and the private might not be what you do but where you do it, and to what end. What we do in private is preparing for what we’ll do in public, so the former happens backstage whereas the latter plays out onstage.

Why do we so often feel compelled to ‘perform’ for an audience? The philosopher Alasdair MacIntyre suggests that narrating is a basic human need, not only to tell the tale of our lives but indeed to live them. When deciding how to read the news, for example, if I’m a millennial I follow current events on Facebook, and if I’m a banker I buy the Financial Times. But if the millennial buys the Financial Times and the banker contents himself with Facebook, then it seems the roles aren’t being played appropriately. We understand ourselves and others in terms of the characters we are and the stories we’re in. ‘The unity of a human life is the unity of a narrative quest,’ writes MacIntyre in After Virtue (1981).

We live by putting together a coherent narrative for others to understand. We are characters that design themselves, living in stories that are always being read by others. In this light, Morgan’s selfie is a sentence in the narration that makes up her life. Similarly, in Nausea (1938) Jean-Paul Sartre wrote: ‘a man is always a teller of stories, he lives surrounded by his stories and the stories of others, he sees everything that happens to him in terms of these stories and he tries to live his life as if he were recounting it’. Pics or it didn’t happen. But then he must have asked himself, sitting at the Café de Flore: Am I really going to tell someone about this cup of coffee I’m drinking now? Do I really recount everything I do, and do everything just to recount it? ‘You have to choose,’ he concluded, ‘to live or to tell.’ Either enjoy the coffee or post it to Instagram.

The philosopher Bernard Williams has the same worry about the idea of the narrated life. Whereas MacIntyre thinks the unity of real life is modelled after that of fictional life, Williams argues that the key difference between literature’s fictional characters and the ‘real characters’ that we actually are resides in the fact that fictional lives are complete from the outset whereas ours aren’t. In other words, fictional characters don’t have to decide their future. So, says Williams, MacIntyre forgets that, though we understand life backwards, we have to live it forwards. When confronted with a choice, we don’t stop to deliberate what outcome would best suit the narrative coherence of our stories. True: sometimes, we can think about what decision to make by considering the lifestyle we have led, but for that lifestyle to have come about in the first place we must have begun to live according to reasons more fundamental than the concerns of our public image. In fact, says Williams, living by reference to our ‘character’ would result in an inauthentic style different from that which had originally risen, just like when you try methodically to do something that you’ve always done naturally – it makes it harder. If you think about how to walk when you’re walking, you’ll end up tripping.

When Williams wrote this more than 10 years ago, there were fewer than half the selfies in the world than there are today. If he’s right, does that mean we’re trying to understand more about ourselves now? In a way, it does, but that’s because making sense of our lives backwards is actually necessary to carry them forwards. As the philosopher David Velleman says, human beings construct a public figure in order to make sense of their lives, not as a narration but as a readable image that they themselves can interpret as a subject with agency. Even Robinson Crusoe, isolated from any audience, would need to shape a presentation of himself so that he could keep track of his life. From this need for intelligibility stems the distinction between the private and the public spheres: in order to be intelligible, we must construct a self-presentation, and in order to do that we must select what of our lives we present and what we choose to keep private. So the private sphere is what we hide not because we deem it shameful but because we decide it doesn’t contribute to our self-presentation, and hence to our sense of agency. Morgan’s selfie, here, is just a manifestation of the basic human need for self-presentation.

The selfie epidemic, Velleman might say, is a consequence of the increase in the amount of tools for self-presentation – Facebook, Twitter and the like. That, taken together, shrinks the private sphere. If controlling what we show is an inevitable urge, social media is only an explosion of the means to satisfy it. On the face of this, Velleman thinks we could use a little more refraining, but he wrote this 14 years ago. Today, I imagine him ranting at the hashtags that colour people’s self-presentation (#wokeuplikethis, #instamood, #life, #me). But no matter: he himself said that everyone is entitled to expose and hide whatever they see fit.

Whether life should be seen as a narrative or not, living is about choosing what to present and what to keep to oneself. We must navigate between the two. And if what counts as public or private can vary among cultures or age groups, it can vary among individuals too, so whenever I catch my flatmates snapping a selfie, I will continue to feel just as awkward as if I’d accidentally caught them changing their underwear.

A version of this piece was published in the Spanish language magazine Nexos and was written by Emmanuel Ordóñez Angulo, a writer, filmmaker and graduate student in philosophy at University College London, where his research interests include the convergence of the philosophy of the mind and aesthetics. https://aeon.co/opinions/why-watching-people-take-selfies-feels-so-awkward