How we came to love our machines more than each other

A few years ago, I had a delicious discussion with Lavie Tidhar, a science fiction writer, about the meaning of the word ‘future’. Was there, for the practical purpose of writing, a point at which the future was clearly delineated from the present? How far into the future need a writer travel to separate fragile fantasy from rigorous reality? For him, the future is when things ‘start to get weird’. For me, it’s when fact becomes more fantastic than fiction.

I was living in Australia when news flashed through that Kim Yoo-chul and Choi Mi-sun, a couple who had met online, had allowed their baby daughter to starve to death. They had become obsessed with raising an avatar child in a virtual world called Prius Online — their virtual baby was apparently more satisfying than their real-life one. According to police reports, the pair, both unemployed, left their real daughter at home, alone, while they spent 12-hour sessions caring for their digital daughter, cutely named Anima, from a cyber cafe in Seoul.

It’s easy to dismiss this story as being about people taking their love of computer games too far. But this reading of the tale as a kind of teary technological fable could be more misleading than we imagine. A more careful reading would see that it’s about identity, purpose, and intimacy in an age of super-smart, emotionally programmed machines. It is also about social interaction, addiction, displacement, and how some people can only deal with so much reality. Most of all, it’s about how our ancient brains are not well equipped to distinguish between real relationships and parasocial, or imagined, ones.

Seoul itself is fascinating because it gives us an indication as to where the rest of the world may be heading. Being the most wired city on Earth, it has a veneer of the future, but deeper down it is stuck in the 1950s. The country has the fastest average broadband speeds in the world, and there are plans for a 5G network that will make things 1,000 times faster. On the other hand, you need a government-issued identity number to use the internet in Starbucks, and censors cut out chunks of content from the internet each week.

It’s possible that more governments will seek to limit what people can access online, and that the open, participatory, and libertarian nature of the internet will be tamed via censorship and regulation. But it’s equally possible that weakly regulated companies will create virtual experiences so compelling that people withdraw from meaningful relationships with other humans and no longer profitably participate in society.

Some screen-based gambling machines are already designed to monitor how people play and to deliberately attack human vulnerabilities so that users will gamble for as long as possible. The aim, in industry parlance, is to make people play to extinction. In the case of Kim Yoo-chul and Choi Mi-sun, this is exactly what happened.

More or less human

South Korea’s neighbour Japan is another country where the past competes head-on with the future. Ancient cherry-blossom festivals exist alongside robots in kindergartens and care homes. This automation fills the gaps where demographics, community, and compassion have fallen short.

One such robot is Paro, a therapeutic care-bot in the form of a furry seal. The aim is companionship, and the seal modifies its behaviour according to the nature of human interaction.

Used alongside human care, Paro is a marvellous idea. There are tug robots that autonomously move heavy hospital trolleys around, too. These save hospital porters from back injuries, but, being faceless and limbless, they aren’t especially charismatic. They don’t smile or say hello to patients either, although they could be made to do so. Looked at another way, however, both are examples of technology reducing our humanity. What’s needed is not more efficiency-sparking electronics, but more human kindness and compassion.

Sherry Turkle, a professor of the social studies of science and technology at MIT, believes that it’s dangerous to foster relationships with machines. She says there’s ‘no upside to being socialised by a robot’. Vulnerable groups such as children and the elderly will bond with a robot as if it were human, creating attachment and unreasonable expectations. Her unsettling conclusion is that we ‘seem to have embraced a new kind of delusion that accepts the simulation of compassion as sufficient’. The subtitle of her book Alone Together says it all: why we expect more from technology and less from each other.

In some ways, creating a caring robot is more of an intellectual or scientific challenge than a practical necessity. But if there are people who cannot relate to others, who don’t mind or even prefer sharing life with a robot, perhaps there is some benefit, as anyone who has watched the science fiction film Bicentennial Man might attest. Maybe physical presence and human contact don’t matter, or they matter much less than we currently think.

Eventually, we will create robots and other machines that become our close friends by simulating reciprocity and personality. We will probably program fallibility, too. And perhaps we won’t care that these traits aren’t authentic.

We do not currently seem to mind that a great deal of what we readily accept about other people online is an edit of reality. The digital identities and narratives we weave rarely include elements of fear, doubt, or vulnerability. We photoshop ourselves to appear happier, prettier, and more successful than we really are, because we favour pixelated perfection over analogue ambiguity. (The daily news is a similar edit of reality, also opposed to ambiguity, but it tends to operate in the opposite direction, generally exaggerating human misery and conflict while ignoring humility and happiness.)

But before we build emotional relationships with machines, shouldn’t we be asking ourselves what we might be doing this for? Shouldn’t we be delving into debates about what it means to be human, and then measuring whether new technologies have a positive or negative impact? Technology, after all, is an enabler, not an end in itself. This doesn’t mean travelling backwards; it does, however, mean that technologists should sit alongside philosophers, historians, and ethicists. We need wisdom alongside knowledge, a moral code alongside the computer code.

Looking back at the origins of modern machines, we can see that the purpose of technology was either to do something that humans couldn’t do or to remove human drudgery. The word ‘robot’ comes from the Czech word ‘robota’, meaning ‘forced labour’. Using machines to replace people in dull or dangerous jobs is entirely reasonable. Using machines to enhance human interactions and relationships is sensible, too. But I suspect that in many instances, the aim nowadays is simply to reduce human costs, and we accept this because we’re told that it’s efficient or because we’re given no choice.

The human costs in a broad societal sense, however, aren’t accounted for. The dominant narrative of the early 21st century is therefore machine-centric. It is machines, not people, that are revered, when surely it should be the reverse.

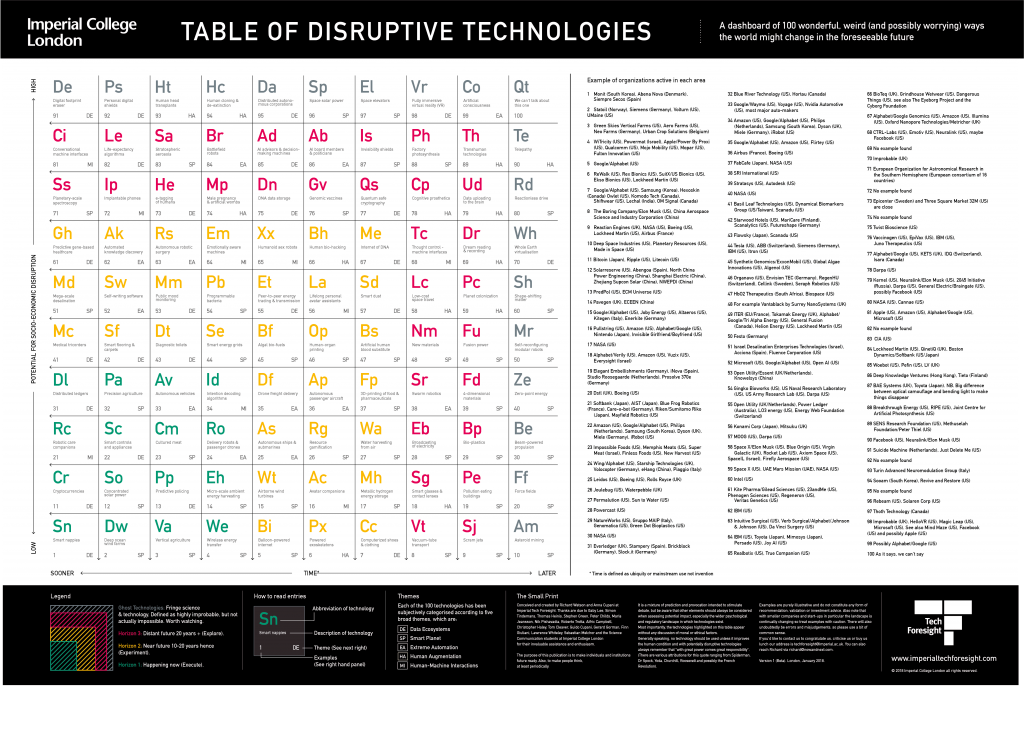

I was recently watching a BBC television programme that reported on a study by Oxford University suggesting that around half of current occupational categories may be lost to automation over the next 20 years. This won’t be a problem if new and better jobs are created, which is what has happened historically. The industrial revolution destroyed many professions, but it created others, increased wages, and ushered in a new era of productivity and prosperity. Yet the internet could be doing the total opposite. According to the computer scientist and author Jaron Lanier, the internet is destroying more jobs and prosperity than it’s creating. Amazon might be one example of such destruction; others can be found in industries ranging from music and photography to newspapers and hotels.

What’s interesting is how fatalistic most people are about this. One person interviewed by the BBC commented: ‘It’s just progress, I guess.’ But progress towards what?

The illusion of progress

Looked at with a long lens, progress does appear to be on a sharp upward curve, yet this is partly an illusion of perspective. Under a magnifying glass, the curve fragments into a series of advances, frantic retreats, and further advances. Robert Gordon, a professor of macro-economics at Northwestern University in America, claims that many of our so-called revolutionary technologies are nothing of the sort. The impact of older technologies such as running water, sewerage, electricity, automobiles, railways, postage stamps, and telephones was far greater than that of any of the digital technologies we have today. The internet, as technology writer Evgeny Morozov summarises, is ‘amazing in the same way a dishwasher is amazing’. One anonymous wag appears to agree, claiming that San Francisco–based ‘tech culture is focused on solving one problem: what is my mother no longer doing for me?’

By and large, digital technologies represent incremental change. Above all, they are symbolic of our quest for convenience and efficiency.

Nevertheless, there are some who worship at the shrine of such progress. Martha Lane Fox, a founder of lastminute.com, argues that anyone who is against the digital revolution is a heretic. There are no excuses — everyone must be online. Those who resist, regardless of age, must be given a ‘gentle nudge’ because being offline is ‘not good enough’.

Lane Fox appears to equate any digital resistance with that of the Luddites. But what she might be missing (as Andrew Keen, author of The Internet Is Not the Answer, points out) is that while the internet does indeed liberate, inform, and empower, there’s a danger of being fooled again. Our new boss is the same as our old boss: ‘The error that evangelists make is to assume that the internet’s open, decentralised technology naturally translates into a less hierarchical or unequal society.’

Moreover, what are the consequences if individuals no longer interact with other human beings as much as they once did, and what might happen if people are replaced with unseen machines in a growing number of roles and relationships?

Is it a problem, for instance, that four-year-old children are having therapy for compulsive behaviour concerning iPhones, or that training potties can now be bought with iPad stands? Would it be acceptable for robots to raise children or for a closure avatar to be the last face that an individual sees before they die — and if not, why not? Will we be forced to get used to such things, or will we fight back, redefining progress and dismantling this dystopia with a simple screwdriver?

For me, the relationship and balance of power between humans and machines is a fundamental one for both current and future generations to figure out. So why is there so much silence? Perhaps it’s because most of us are tethered to mobile devices that constantly distract us and prevent us from thinking deeply about the impact of these technologies.

Information is now captured and disseminated 24/7, so there’s little time to clear one’s head. Or perhaps we prefer to be busy, because slowing down and reflecting on who we are and where we’re going — or whether we’re achieving anything of enduring substance — is too terrifying to think about.

Me, my selfie, and I

I was in a coffee shop recently, although it was more of a temporary workspace and substance-abuse centre than a cafe. Power cables were strewn across the floor, and almost everyone in the cafe was on a mobile device — unable, it seemed, to be alone with their own thoughts or without caffeine for more than 60 seconds. People’s personal communications appeared to be thwarting personal communication. These people clearly had hundreds of digital friends, but they were absorbed in their own tiny screens in the company of real people, all of whom had been abandoned.

The cafe was full, but people were texting, not talking. Consequently, there was no noise. No chatter. No laughter. The atmosphere was both vacuous and urgent, which was an odd combination.

Were these neurotically self-aware people really thinking? Were they feeling the sensation of time slowly pressing against their skin? Not as far as I could tell. They were writing pointless PowerPoint presentations, were glued to Facebook and Twitter, or were sending desolate photographs of their over-sized biscuits to their acquaintances on Snapchat and Instagram. Most seemed set to broadcast rather than receive, and were engaged in what the informed and bewildered writer Christopher Lasch once described as ‘transcendental self-attention’.

This was the same month in which pro-Russian separatists shot down a passenger plane, Syria was soaked in blood, and Palestine and Israel were throwing retributions at each other.

A while earlier, articles had appeared questioning the digital revolution that wasn’t, especially the way in which the lethargy of productivity growth in the US appeared to coincide with the widespread adoption of personal computing. As the American economist Robert Solow commented, computers were everywhere except in the numbers.

There was clearly much to discuss. I felt like standing up and shouting, ‘I’m mad as hell, and I’m not going to take this anymore’, but the film reference would have been lost among the ubiquitous network coverage.

Perhaps this inward focus explains how we can be outraged by a South Korean couple starving their daughter to death one minute and forget about them the next, our memory erased by the mundane minutiae of our daily digital existence.

Another reason we might not be asking deep questions about why we’re here and what we’re for — and how technology might fit into the equation — could be that many of the people pushing these new communication technologies are on the autistic spectrum and, paradoxically, have problems communicating with and relating to other people. As the novelist Douglas Coupland comments in Microserfs: ‘I think all tech people are slightly autistic.’ The reason that so many silicon dreamers want to lose contact with their physical selves and escape into a shining silver future might be that, due to their meticulous minds, their burdensome bodies have never been wanted. This dichotomy between autistic technologists and everyone else is reminiscent of The Two Cultures by C.P. Snow. A scientist and novelist himself, Snow described in 1959 how the split between the exact sciences and the humanities was a major encumbrance to solving many of the world’s most pressing problems.

Or perhaps the whole human race is becoming somewhat autistic, preferring to live largely alone, interacting only reluctantly and awkwardly with others. One thing I have certainly observed first-hand is the way in which an increasing number of people are finding real-life interactions onerous — not only in Silicon Valley, Tokyo, and Seoul, but elsewhere, too. Carbon-based bipeds have illogical needs, and our impulses can be a source of irritation to others.

Human beings are therefore best dealt with through digital filters or avoided altogether. If you doubt this, and you have a spare teenager to hand, try phoning them without warning. This doesn’t always work — often because they won’t answer your call — but if you do get through, the immediacy of a phone call can be unsettling. A phone call is in real time. It demands an instant reaction and cannot be photoshopped or crowdsourced to ensure an optimum result. Better still, see what happens when the teenager’s phone is lost or withdrawn for 24 hours. It’s as though their identity has collapsed, which in some ways it has. As British scientist, writer, and broadcaster Susan Greenfield points out, ‘Personal identity is increasingly defined by the approbation of a virtual audience.’ Staying connected is also about protection: when a teenager isn’t online, they are unable to manage what others are saying about them. None of this is a criticism of younger people. It is merely to say that they could be a harbinger of things to come, and according to some observers, such as the Canadian psychologist Susan Pinker, technology is pushing humanity towards crisis point.

Yet the success of mobiles and social media is no surprise given how difficult we’ve made it for younger people to get together in the real world. Yes, there are questions around exhibitionism, narcissism, and hatred online, and as George Zarkadakis, a computer scientist and science writer, has argued, ‘social networks erode previous social structures and reintroduce tribalism into our post-industrial societies’. But in my experience, social networks also fulfil a basic need for friendship and human contact.

We’ve convinced ourselves that the real world is full of physical dangers, especially for children. So while we protest about children being addicted to screens, we are reluctant to let them outside and out of our sight unless they are tethered to a mobile device or wearing clothing that emits a tracking signal.

How long, one wonders, before parents program drones with cameras to hover above their children’s heads when they are outside or on their own? In this context, it’s easy to see social media as a direct reaction to parental paranoia.

Of course, what we don’t realise is that incorrect assessments of risk might be destroying the one thing we value above all else. The emotional shorthand of the digital world could be diminishing human relationships, as might our lack of presence, even when someone is sitting right next to us.

I, too, can feel uncomfortable in the presence of others, but a coffee shop full of people who are physically in attendance yet mentally elsewhere is worse. As the philosopher Alain de Botton has said, it’s not so much people’s absence nowadays that hurts as people’s indifference to their absence.

With well over a billion people on Facebook, you might think that friendship would be suffering from oversupply. But according to the US General Social Survey, it’s not. Between 1985 and 2004, the average number of close friends (people you can really rely on in a crisis) per person fell from three to two, while the proportion of people with no such friends increased from 8 per cent to 23 per cent. This finding has been criticised, yet other surveys have similarly linked rising internet use with increasing isolation.

Facebook may even be making people angry and frustrated, according to a 2013 study by Humboldt University’s Institute of Information Systems in Germany. Meanwhile, a 2013 University of Michigan study suggests that Facebook might be making people more envious of others and hence less happy.

There are now websites (e.g. rentafriend.com) that will find you a physical friend. Such websites may have more to do with a desire for companionship than with loneliness per se. But if you factor in weakening community ties, ageing populations, and the rise of people living alone, one does wonder whether Theodore Zeldin, the provocative Oxford University thinker, might be right in suggesting that loneliness could be the single biggest problem of the 21st century.

According to a recent Relate survey, 4.7 million people in the United Kingdom do not have a close friend. A poll for the BBC found that 33 per cent of Britons, including 27 per cent of 18-to-24-year-olds, felt ‘left behind’ by digital communications, while 85 per cent said they preferred face-to-face communication with friends and family.

Viktor Mayer-Schönberger, the author of Delete: The Virtue of Forgetting in the Digital Age, says that our increasing desire to record every aspect of our lives is linked to our declining number of close relationships, caused notably by falling fertility rates and smaller households. Because we no longer have the context of traditional intergenerational sharing, we are relying on digital files to preserve our memory and ensure that we’re not forgotten.

The stability of these files is, of course, a problem. Vint Cerf, one of the early pioneers of the internet, recently said that technology was moving so fast that data could fall into a ‘black hole’ and one day become inaccessible. His advice was that we should literally print out important photographs on paper if we wished to keep them.

Connected to our sense of such digital instability might be recent events ranging from the claims about Iraq’s weapons of mass destruction (WMD) and the collapse of Enron to the global financial crisis (GFC) and the rigging of Libor. Such scandals mean that we have lost faith in the ability of our leaders and institutions to tell us the truth or give us moral guidance. A few years ago, US social scientists suggested that the collective trauma of 9/11 might create a sense of cooperation, but the indications are that the reverse has happened.

According to a study of 37,000 Americans over the period 1972–2012, trust in others, including trust in government and the media, fell to an all-time low in 2012. A Pew report, meanwhile, says that only 19 per cent of Millennials trust others, compared with 31 per cent of Generation X and 40 per cent of Baby Boomers. The decline in trust means that we no longer know whom or what to believe and end up turning inwards or focusing on matters close to home.

Overall, the effect is that we end up with an atomised society where we crave elements of stability, certainty, and fairness — but where the individual still reigns supreme and is more or less left to their own devices.

Another example of declining real-life interaction is shopping. Many people now try to avoid eye contact with checkout assistants because this requires a level of human connection. Better to shop online, use self-scanning checkouts, or wait until checkouts and cash registers eventually disappear, replaced by sensors on goods that automatically remove money from your digital wallet as you exit the shop.

This could be convenient, but wouldn’t it make more sense if we thought of our shopping as being someone else’s job, or of shops as being communities? Doing so would mean giving others more recognition and respect.

As Jaron Lanier asks in Who Owns the Future?, ‘What should the role of “extra” humans be if not everyone is still strictly needed?’ What happens to the people who end up being surplus to the requirements of the 21st century? What do we ask of these people who used to work in bookshops, record shops, and supermarkets? What is their purpose?

Current accounting principles mean that such people are primarily seen as costs that can be reduced or removed. But for older people in particular, sales assistants might be the only people that they talk to directly all week. Furthermore, getting rid of such people can cost society far more than their salary if long-term unemployment affects relationships, schooling, and health.

Are friends electric?

Personal relationships can be frustrating, too. In San Francisco, Cameron Yarbrough, a couples therapist, comments that ‘People are coming home and getting on their computers instead of having sex with their partners.’ This could be an early warning sign of a decline in deep and meaningful relationships, according to Susan Greenfield, who also says that we may be developing an aversion to sex because the act is too intimate: it requires trust, self-confidence, and — above all — conversation.

In Japan, some men have now dispensed with other people altogether, preferring to have a relationship with a digital girlfriend in games such as Konami’s LovePlus. A survey by the Japanese Ministry of Health, Labour, and Welfare in 2010 found that 36 per cent of Japanese males aged 16–19 had no interest in sex — a figure that had doubled in the space of 24 months. To what extent this might be due to digital alternatives is unknown, but unless Japanese men face reality and show more of an interest in physical connection, the Japanese population is expected to decline by as much as a third between now and 2060.

Nicholas Eberstadt, a demographer at the American Enterprise Institute, claims that Japan has ‘embraced voluntary mass childlessness’, with the result that Japan not only has the fastest-ageing population in the world, but also has one of the lowest fertility rates. This is especially the case in Tokyo, the world’s largest metropolis. Commentators have linked the rise of digital partners to a sub-culture known as ‘otaku’, whose members engage in broadly obsessive geek behaviour associated with fantasy themes.

In the opening pages of Future Files, I referenced another Japanese phenomenon, called ‘hikikomori’, which roughly translates as ‘withdrawal’ and refers to mole-like young men retreating into their bedrooms and rarely coming out. This can’t be good for birth rates either, although in the Japanese case another culprit for low fertility and low self-esteem might be economic circumstances: the Japanese economy has been in the doldrums for decades.

In the 1960s, 70s, and 80s, young people in Japan could reasonably expect a better life than their parents had. Job prospects were excellent, work was secure, and the future smelt good. I even have a book, buried in a bookshelf, called Japan, Inc. (worryingly, perhaps, located next to China, Inc.), about the domination of the Japanese economy and the resultant obliteration of US competitiveness. But such optimism has gone, and many Japanese feel that they no longer have a future.

You might reasonably expect that the hyper-connected young in Japan and elsewhere would have a strong sense of collective identity, even a level of global synchronicity in emotions, and that they would create a vision of a better tomorrow and then fight for it. But instead of a global village, we’ve just got a village. Social media is facilitating a narrowing, not a broadening, of focus.

Demonstrations and revolts do happen, and online campaigning organisations such as GetUp and Avaaz have an impact, but it remains to be seen whether such protest will change the direction of mainstream politics. Similarly, in the UK, the singer Jason Williamson of the Sleaford Mods belts out intense invectives for the cornered working classes, but most young eyes and ears seem to be elsewhere.

According to The Economist magazine, around 290 million 15-to-24-year-olds globally are neither in education nor working — that’s around 25 per cent of the world’s youth. In Spain, youth unemployment skyrocketed from 2008, spending years at over 50 per cent. But instead of a strong sense of collective action, we have individualism and atomisation. Instead of revolutionary resolve, we have digital distraction. Instead of discontent sparking direct action, it generally appears to be fuelling passivity.

This lethargy is summed up by an image on the internet of a young man sitting in front of a computer in a suburban bedroom, looking out of the window. The caption reads: ‘Reality. Worst game ever.’ This echoes a comment made by Palmer Luckey, the 23-year-old inventor who sold Oculus VR, the company behind the Oculus Rift 3D virtual-reality headset, to Facebook for $2.3 billion in 2014. Luckey has said that virtual reality ‘is a way to escape the world into something more fantastic’. This is a statement that’s both uplifting and terrifying.

Richard Eckersley, an Australian social commentator, describes young people as ‘the miners’ canaries of society, acutely vulnerable to the peculiar hazards of our times’. He notes that detachment, alcohol, drug abuse, and youth suicide are signs that the felt experience of the modern age lacks cohesion and meaning. Nicholas Carr, the author of The Glass Cage, makes a similar point: ‘Ours may be a time of material comfort and technological wonder, but it’s also a time of aimlessness and gloom.’ Maybe the two are correlated. Most people’s lives around the world have improved immeasurably over the last 50 years, but most improvements have been physical or material. As a result, life has become skewed. An imbalance has emerged between work and life, between individuals and community, between liberty and equality, between the economy and the environment, and between physical and mental health — the latter barely captured by traditional economic indicators.

The shock of the old

In the UK, half a million sick days were taken due to mental-health problems in 2009. By 2013, this had risen to a million. Similarly, in 1980, when anxiety disorder became a formally recognised diagnosis, its US prevalence was between 2 per cent and 4 per cent. By 2014, this had increased to almost 20 per cent — that’s one in five Americans. And the World Health Organisation forecasts that 25 per cent of people around the world will suffer from a mental disorder during their lifetime. Why might this be the case?

One argument is that unhappiness is a result of self-indulgent navel gazing by people who no longer face direct physical threats. There’s also the argument that we’re increasingly diagnosing a perfectly natural human condition, or that commercial interests have appropriated anxiety to sell us more things we don’t need. Why simply sell a smartphone when you can be in the loneliness business, selling enduring bliss to people seeking affirmation, validation, and self-esteem?

Digital fantasy and escape can therefore be seen as logical psychological responses to societal imbalances, and especially to the sense of hopelessness caused by stagnating economies, massive debt burdens, and ageing workforces that are reluctant to retire. While Japan has been pessimistic since the 1990s, there’s an argument that Europe is now heading in the same direction as slow economic growth converges with rising government debt, a declining birth rate, and increased longevity.

Even in the US, that cradle of infinite optimism, some members of the Millennial generation are losing faith in the future, believing that decline is inevitable and that the living standards enjoyed by their parents are unattainable. This is the polar opposite of the boundless belief in the future that can still be found in enclaves such as Silicon Valley, where there’s a zealous belief in the power of technology to change the world, even if the technology ends up selling us the same product — convenience.

Yet thoughts of decline aren’t the preserve of the young. I was at a dinner organised by a large firm of accountants not so long ago, when one of the partners recounted a conversation he’d had with the mayor of a large coastal town in the UK. The mayor’s biggest problem? ‘People come to my town to die, but they don’t.’

Over the next two decades, the number of people worldwide aged 65 and over will nearly double. This could create economic stagnation and generational angst on a scale we can’t comprehend. The author Fred Pearce has suggested that 50 per cent of the people who have ever reached the age of 65 throughout human history could still be alive. This is a dramatic statement, but it might be true. Taken together with the projected growth in the over-65s, it suggests that every region except parts of Africa, the Middle East, and South Asia, whose populations are younger, might be on course for lower productivity and greater conservatism in the future.

This demographic deluge could stifle innovation, fuel pastoral nostalgia, and exchange society’s twin obsessions of youth and sex for a growing interest in ageing and death. It could also wreak havoc with savings and retirement because the longer we live, the more money we need. In a bizarre twist, we’ll need life insurance not in case we die, but in case we don’t.

Many pessimistic commentators have equated ageing populations with lower productivity and growth rates, although we shouldn’t forget that expenditure on healthcare still boosts the economy. Those aged 65 and over also hold most of the world’s wealth, and they might be persuaded to part with some of it. Other positive news includes the fact that older populations tend to be more peaceful (more on this later).

Let’s also not forget that globalisation, connectivity, and deregulation have lifted

several billion people out of poverty worldwide, and according to a report by the

accountancy firm Ernst & Young, several billion more will soon follow suit. One billion

Chinese alone could be middle class by 2030. These are all positive developments, but

the forces that are lifting living standards are also creating risks that threaten

continued progress.

If we add to this the uncertainty caused by rapid technological change, political

upheaval, environmental damage, and the erosion of rules, roles, and responsibilities, it

looks like the Tofflers were right and that the future will just be one damn thing after

another. Anxiety will be a defining feature of the years ahead. One psychological

response to this is likely to be misty-eyed nostalgia, but there could also be dangerous attempts to go backwards economically or politically.

We are already seeing some right-wing groups, such as Golden Dawn in Greece,

favouring extreme solutions. These can be attractive because, as George Orwell reminds

us, fascism offers people struggle, danger, and possibly death. Socialism and capitalism,

in contrast, merely offer differing degrees of comfort and pleasure. The promotion of

localism as a solution to the excesses of globalisation can also be attractive, but while

cuddly on the outside, localists have an affinity similar to that of the fascists for closing

minds and borders. There’s nothing inherently wrong with localism; however, taken to

extremes, it can also lead to nationalism, protectionism, and xenophobia.

In 2014, some commentators drew anxious parallels with 1914. This was a little

far-fetched, yet there are curious similarities, not only with 1914, but also with 1939.

These include: rising nationalism in politics, the printing of money, inflationary pressure,

politicised debt, challenges to reserve currencies, the fraying of globalisation, the

expansion of armed forces, finger-pointing toward religious minorities, and last but not

least, widespread complacency.

It might be a logical leap, but I noted in Future Files that the top five grossing

movies back in 2005 were all escapist fantasies. Was this an early sign that reality was

getting too much for people even back then? Fast-forward to the present day, and

dystopias and fantasy still dominate the box office, often featuring werewolves, vampires,

and zombies. The latter, according to the author Margaret Atwood, are alluring because

they have ‘no past, no future, no brain, no pain’. At the same time, there’s also a new

trend for films containing fewer people or almost none at all, including a film called Her,

set in 2025, about an emotional relationship between a human being and a computer

operating system.

We shouldn’t read too much into these films, because they are just entertainment.

On the other hand, films — especially those set in the future — are usually commentaries

on contemporary concerns, particularly regarding technologies we don’t understand or

can’t control. Most monsters are metaphors. Modern Times, released in 1936, was about a

character trying to come to terms with a rapidly industrialising society where the speed of

change and level of automation were unsettling. Plus ça change, plus c’est la même

chose, except that the current surfeit of digital wonders and computer-generated imagery

could be desensitising us to the wonders of the real world and, counterintuitively, curbing

our imaginations.

Our new surveillance culture

So have we invented any new fears of late? Is it the warp speed of geopolitical change

that’s unsettling us? Is technology creating a new form of digital disorder that’s

disorientating or is it something else? Do we still fear our machines, or do we now want

to become part of them by welcoming them into our minds and bodies? I will expand on

digitalised humans later in the book, but for now I would like to discuss reality, how we

experience it, and how we’re changing it.

Before computers, globalisation, deregulation, multiculturalism, and

postmodernism, we had a reasonably clear view of who we were and what we were not.

Now it’s more complicated. Increasing migration means that many people, myself

included, are not quite sure where they’re from, belonging neither to the place where they grew

up nor to the place where they ended up. Rising immigration is also challenging our ideas about

collective and national identity because some historical majorities are becoming

minorities.

Similarly, what was once science fiction has become science fact, although

sometimes it’s hard to tell the difference. Amazon’s much publicised plan for parcel-

delivery drones is a good example. Was this just smart PR or could it actually happen? If

enough people want it to happen, it probably will.

The smartphone, which started to outsell PCs globally back in 2012, has been

hugely important in changing our external environment, although it now looks as though

phones may soon give way to wearable devices. This in turn may usher in the era of

augmented reality and the Internet of Things, where most objects of importance will be

connected to the internet or have a digital twin. If something can be digitised, it will be.

Wearable computing and smart sensors could also mean datafication, whereby

elements of everyday life that were previously hidden, or largely unobservable, are

transformed into data, new asset classes, and — in most instances — money.

Embedded sensors and connectivity mean that many physical items will be linked

to information, while information will, in some cases, be represented by real objects or even smells. Incoming messages are currently announced via sounds (pings, beeps, music, etc.), but they could instead be represented by glowing objects such as necklaces, or even by scents emitted from clothes or jewellery. If you are wearing augmented-reality glasses, a message from your boss at work could even be heralded by a small troop of tiny pink elephants charging across your desk.

On the other hand, using an augmented-reality device as a teleprompter in

relationships or customer-service roles could render sincerity redundant. Other people

wouldn’t know whether you really knew or cared about them. Honesty,

authenticity, and even truth, so essential for human communication, could all be in

jeopardy. The implication here is that the distinction between the physical and the digital

(the real and the virtual worlds of Kim Yoo-chul and Choi Mi-sun) will blur —

everything will become a continuum. Similarly, I’d expect spheres of public and private

data to become equally confused.

An example of this might be the Twitter stream of one of my son’s teachers, who

tweeted that she was ‘sinking pints and generally getting legless’ prior to starting her new

job. You might argue that I shouldn’t have been following her tweets — but then one

might argue that she should have been more careful about what she was saying on a

public forum.

But back to how we are changing physical reality. If you’re not familiar with

augmented reality, it is, broadly, the overlaying of information or data (sounds, videos,

images, or text) over the real world to make daily life more convenient or more

interesting. Then again, perhaps the word ‘augment’ is misleading. What we’re doing is

not augmenting reality, but changing it. Most notably, we are already dissolving the

supposedly hard distinction between what’s real and what’s not real, and in so doing

changing ourselves and, possibly, human nature.

Most people would argue that human nature has been fixed for thousands of years. This is probably true. Yet the reason for this could be that our external environment has been fairly constant until now. I’m well aware of the argument that we’ve used technology to augment reality for millennia — and that once we’ve shaped our tools, our tools shape us — but the difference this time is surely ubiquity, scale, and impact. If wearables do become as alluring as smartphones, this may result in many people abandoning real life altogether. It may become increasingly difficult to exist

without such technologies. But this is potentially only the beginning.

We may start by downloading our dreams and move on to attempts to upload

experiences. Such things are a long way off, maybe even impossible. Yet we’re already

close to the prospect of sticking virtual-reality goggles on our heads to trick our minds

into thinking something is happening when it’s not, and I’m sure implants can’t be far

off.

The idea of figuring out what human consciousness is, replicating it, and then

uploading it (i.e. ourselves) into machines, thereby achieving a kind of immortality, is the

stuff of science fiction. But some people believe it could one day become science fact,

allowing ‘us’ to be transmitted into deep space at the speed of light, where we might

bump into — travelling as packets of data in the opposite direction — aliens or even God.

(Why can’t aliens — or God — be digital? Why do we always assume that intelligent

aliens would have physical form, usually bipedal?)

In the meantime, don’t forget that while corporations may one day anticipate our

every need and personalise our experience of reality, governments could be doing

something more sinister, such as erasing our memories or implanting false ones, thereby

changing reality in another direction.

The things to worry about here are individual freedom, mental privacy, and self-

determination, especially what happens to the private self if it is constantly spied upon.

It’s possible that we’ll accept governments gathering information about us in

return for the promise of security, in which case privacy will become collateral damage.

But with companies, it could be different. At the moment, we seem happy to trade

privacy for convenience or personalisation, yet where is the line legally and ethically? Is

it right for Apple, Google, or Facebook to have unhindered access to our personal data or

to know more about us than national security agencies?

Is it right, as the writer Bruce Schneier suggests, that ‘The primary business

model of the internet is built on mass surveillance’? Or that, if something is free on the

internet, you are probably the product?

Flying further into the future, what if companies used remote brain scans to intercept our thinking or predict our actions? Remote brain scanning could be impossible, but Facebook is doing a good job of mind-reading already. No wonder the WikiLeaks co-founder Julian Assange described Facebook as ‘the greatest spying machine the world has ever seen’.

CCTV cameras, along with phone intercepts, are largely accepted as a fact of life

nowadays, but what if everything you say and do were captured and kept for posterity,

too? How might you live your life differently if you knew that everything you did might

one day be searchable? Radical transparency could fundamentally change our behaviour.

What, for instance, happens to intimacy when there are no secrets and everything is made

public? What happens to a person’s individual identity?

And forget Big Brother — we’re the ones with the cameras. We live in an era of

mass surveillance, but the lenses are focused on ourselves, and the trend is towards self-

disclosure and selfie-centred activity. In the UK, for example, almost half of the photos

taken by 14-to-21-year-olds and uploaded to Instagram are selfies. I’m not saying that

Orwell was wrong, but it might turn out that Aldous Huxley was more right.

Ray Bradbury may have been slightly off target, too: you don’t need to burn

books if nobody reads them any longer. Open discussion in pursuit of truth is irrelevant if

it’s watered down to the point of invisibility by a deluge of digital trivialities.

Transparency is a force for good. It can expose wrongdoing, promote cooperation,

uncover threats, and level the playing field in terms of the distribution of economic power

in society. But too much transparency, along with near-infinite storage capacity, could

turn us into sheep. Peer pressure could become networked, and conformity and

conservatism could result. Hopefully, new rules and rituals will emerge to stop people

observing what other people don’t want them to see.

We may also come to realise that certain things are best left unsaid or at least

unrecorded.

Perfect remembering

I’d imagine that for some people, wearables and implanted devices would enable a kind

of sixth-sense computing. Individuals will record and publish almost everything from

birth to death, and the resultant ‘life content’ will be searchable and used to intuit various

needs and to fine-tune efficient living.

It will also enable people to become intimately acquainted with those who died

before they were born. For example, gravestones might allow interaction so that family

members, friends, and other interested parties would be able to find out details about the

lives of the deceased. It may even be possible for algorithms to combine ‘life content’

with brain data and genetics to create holograms of dead people that would respond to

questions.

Of course, some people will choose to turn these recording technologies off or use

them with restraint. Such individuals will understand the need for boundaries surrounding

the use of technology and will appreciate that not everything in life needs to be measured,

augmented, or kept forever for it to be worthwhile.

Their beliefs would probably be anathema in South Korea, where apps such as

Between already allow lovers to record every significant moment in their lives, along

with the duration of their relationships. This doesn’t currently include the ability to track

how many other people your partner has looked at each day, but if eye tracking and facial

recognition become commonplace, there’s no reason to suppose that it won’t be added.

Whether or not a significant ‘tech-no’ movement will ever emerge, I’m unsure. I

can imagine an eventual backlash against Big Tech, as occurred with Big Tobacco, but I

think it’s rather doubtful — although if you talk privately to people of a certain age, it’s

surprising how many would find it a relief if the internet were turned off at weekends or

disappeared altogether. What we’re more likely to see is a rebalancing. Certain places

will limit what you can and can’t do in them, and Screen-free Sundays at home or Tech-

free Tuesdays at work may appear.

We might see cafes or churches using special wallpaper or paint to block mobile

signals. There was a case recently of a person who responded to news that a friend had

died with the text ‘RIP INNIT’. This person may soon be forwarded straight to hell —

which for them might be an eternity in Starbucks with no mobile reception.

There’s also an emerging trend where people who spend all day looking at

screens or communicating with people remotely want to engage physically with other

people or use their hands to make things when they’re not working. I met an accountant

recently who had taken up making fishing flies by hand because this was, in his words,

‘an antidote to the world of computer screens and numbers’. There’s even a spark of

interest in chopping and stacking your own firewood, although I suspect this has more to

do with a crisis of masculinity than an attempt at digital detoxing.

But assuming, for a moment, that we don’t significantly switch things off, what

might a hyper-connected world look like 20 or 30 years hence? More specifically, how

might our identities change if we never switch off from the digital realm and are never

allowed to escape into a private sphere?

Early indications are that a significant number of people are finding two-

dimensional simulations of life more captivating and alluring than five-dimensional

reality, and that while collaboration and sharing are on the increase, compassion,

humility, and tolerance are not. Why might this be so?

One reason could be chemistry. Sending and receiving messages, manipulating

virtual worlds, and gamifying our everyday lives can result in blasts of dopamine, a

neurochemical released in measured doses by nerve cells in our brains to make us feel

excited, rewarding certain types of behaviour. Online friendship has not been described

as the crack cocaine of the digital age for nothing: even the tiniest text message,

flirtatious email, or status update results in a small jolt of pleasure, which reinforces the behaviour through what animal

behaviourists call ‘operant conditioning’.

There is some good news on the horizon, however. David Brooks, writing in The

New York Times, posed the question of whether technological change can cause a

fundamental shift in what people value. To date, the industrial economy has meant that

people have developed a materialist mindset whereby income and material wellbeing

have become synonymous with quality of life. But in a post-industrial or knowledge economy,

where the marginal cost of digital products and services is practically zero, people are

starting to realise that they can improve their quality of life immeasurably without

increasing their income. This is a radical thought, not least because many of the new

activities that create quality of life or happiness do not directly produce economic activity

or jobs in the conventional sense.

It could be argued, for example, that Facebook creates happiness, yet Facebook employs very few people. It is in the self-actualisation business and allows people to express themselves. In the past, great fortunes were made by making things that people wanted.

Making people want themselves might make the great fortunes of the future. Moreover, if

human happiness is fundamentally derived from helping other people, then the new

philosophy of online sharing and collaboration could herald the dawn of a new age of

fulfilment.

Regardless of material possessions, it seems that what most people want from life

is straightforward. They want connection, support, and respect from family and friends.

They want purposeful work, enough money, freedom from violence and abuse, and a

community that cares for everyone. People also want a shared vision of where society is

heading, and to be part of something meaningful that is larger than themselves — this

could be family, an organisation, a nation, a belief, or an idea.

In the case of Kim Yoo-chul and Choi Mi-sun, work was absent, but arguably so

too was everything else.

Our environment has changed an awful lot since the Stone Age, while our neural

hardwiring has not. One major consequence of this is that we still crave the proximity,

attention, and love of other human beings. This could change, but currently it’s what

marks us out as being different to our machines. We should never forget this — no matter

how convenient, efficient, or alluring our machines become.

[Future Flash 1 — ‘Digital’]

Dear LifeStory user #3,229,665

Daily data for Friday, August 12, 2039

Devices currently embedded: 6

Digital double status: OK (next payment due September 1, 2039)

Today’s time online: 18.4 hours

Data uploaded: 67,207 items

Data downloaded: 11,297 items

Images shared: 1,107

Images taken without consent (automatically removed): 4,307

Micro-credits earned: 186 @ $0.00001

Nudges: 144

Good citizen points: 4

Nike points: 11

Pizza Planet pizza points: 18

Coke Rewards: 4

Outstanding infringement notices: 1

Contact with people of moderate risk: 1

Home security: FUNCTIONING

Anxiety alert level: MODERATE

Vital signs: NORMAL

Water quality: GOOD (supply constraints forecast)

Air quality level (average): 5

Flagged foods: 45

Calories consumed: 1,899

Calories burnt: 489

Total steps taken: 3,349

Energy inputted into local network: -112

CO2 emissions (12 month average): 22.8 tonnes

Non-neutral transport: 14 km

Travel advisory: recommend route B12

Automatic appointments confirmed: 8

Goods approaching wear out date: 16

Personal network size (average): 13,406

Eye contact with people in close network: 3

Eye contact with people not in network: 2

Individuals to be deleted: 4

Automated greetings sent: 87

Current relationship status: single, tier 4

People currently attracted to you: 2

Pupil dilation score (daytime): 12

Pupil dilation score (evening): 0

Last coupling: 43 days

Coupling agreements signed: 3

Attention level: 4

Learning level: BRONZE

Lessons learnt: 0

Current life expiry estimate: January 11, 2064

Note: all information contained in this update remains the property of LifeStory and cannot be shared or used commercially without the prior permission of the copyright holder. Failure to maintain monthly payments puts your entire data history at risk.