Unconventional wisdom

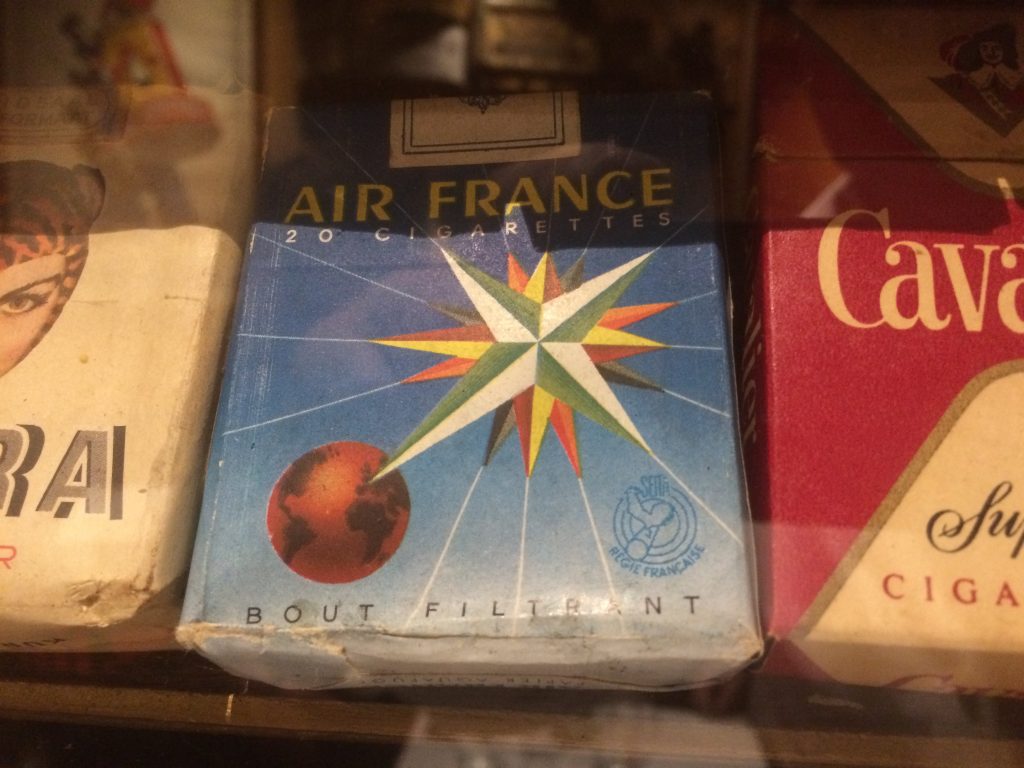

Spotted in Paris last week.

Trust used to be what most companies were selling. It was built up, consistently, over many years and, once acquired, was the ultimate form of IP and a scalable asset.

Nowadays most major companies have the opposite problem and so do many of our institutions. Nobody trusts them any longer. This is true of the entire financial services industry, all but a handful of politicians, most journalists, the police, the church, a number of scientists and just about any global multinational you care to mention.

In theory, the internet should be able to solve this problem. Millions of online voices rate their satisfaction with just about everything that matters. But a while ago Amazon ran into trouble because it discovered that lots of user reviews on its site were untrustworthy. They were written anonymously, often by someone related to someone trying to sell something, e.g. an author selling a book.

Or take Instagram, part of Facebook (don’t even mention them). A while back Instagram announced that all the photographs that users had entrusted it with now belonged to the company, and that the company would be selling as many of them as it could for a profit.

So what’s to be done? On one hand it’s a serious crisis of confidence in both capitalism and democracy. On the other hand, perhaps we are missing the power of feedback loops and cycles. If and when something tips too far in one direction, this creates an opportunity for someone, or something, to move off in the other direction.

Perhaps someone will eventually devise a way of getting everyone on the planet to rate everything – a global reputation index if you like. If someone refuses to take part this will indicate they have something serious to hide. (Sounds like a cross between Facebook and an episode of Black Mirror.) But surely this is a new form of sanctimonious conformity? No. What we need here is not more information, but less. Information is the problem, not the solution. There is too much of it to analyse properly and we no longer trust anyone to filter or analyse anything for us. (Information Overload meets Filter Failure.)

Perhaps we need a handful of media to become truly independent and trusted, to filter what’s relevant and to place it in a proper context too. Either way, provenance is on the rise. (See Explainable AI for a digital version of provenance.)

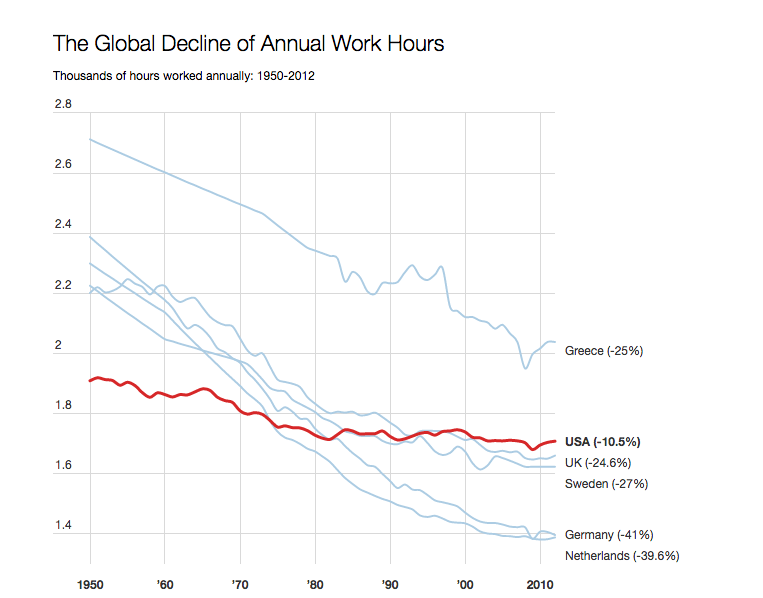

I heard recently that there’s more gold in mobile phones than in the earth. I checked this out and it appears to be nonsense. However, I did discover this….

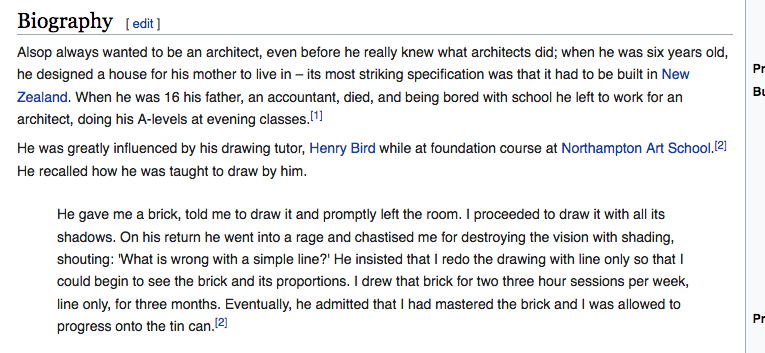

This reminds me of John Cage citing the Zen koan: “If something is boring after 2 minutes, try it for 4. Then 16. Then 32. Eventually one discovers that it is not boring at all.”

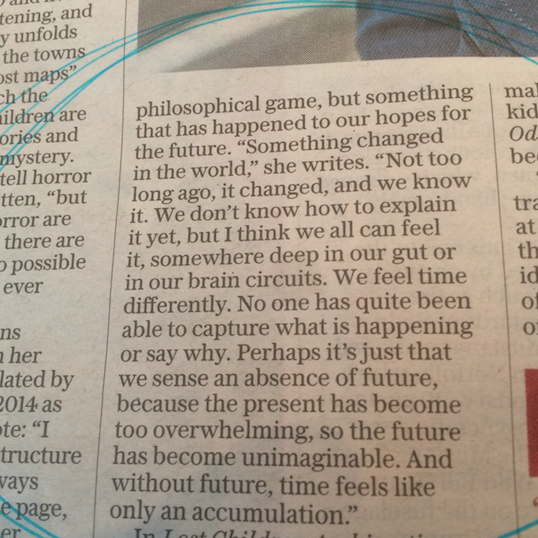

You’re going to ask who said this, aren’t you? I’ve lost the name. South American writer. Female. Contemporary. Anyway, she nails it. I think it’s a mixture of everything happening too fast (the future arriving all at once if you like) plus a lack of historical anchor points and then a lack of future vision or narrative. It’s like we’re all chasing ghost cats in thick fog in the middle of a zombie apocalypse.

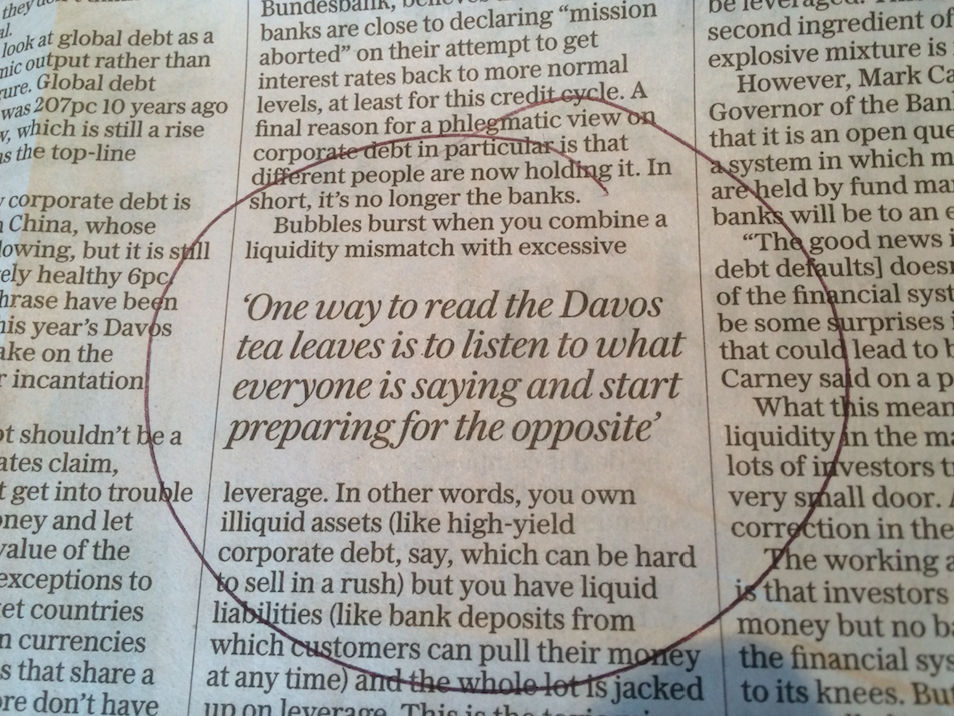

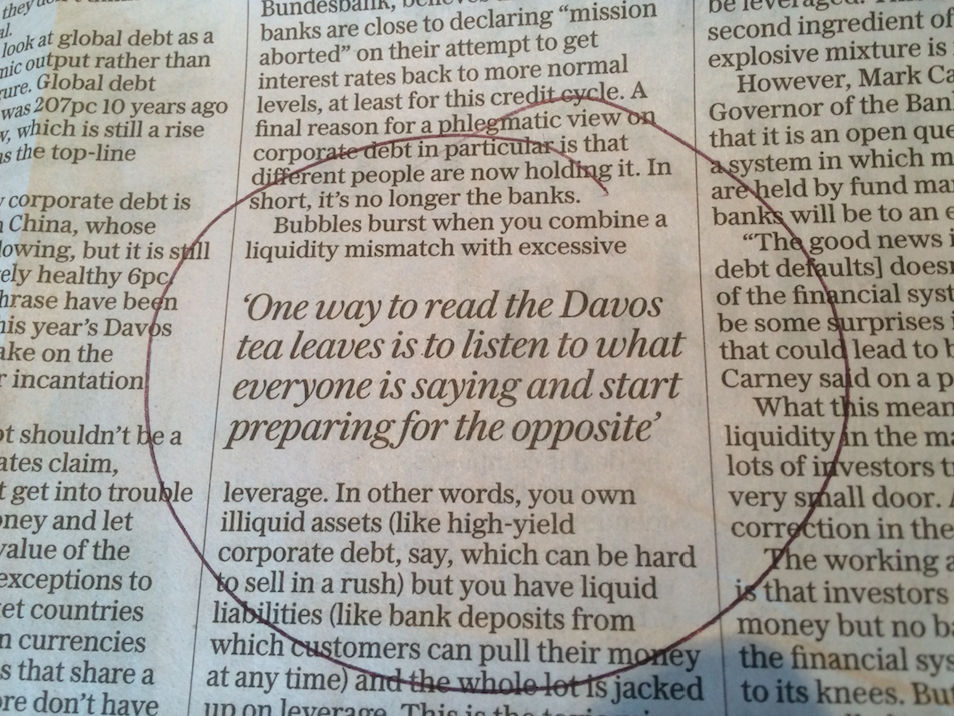

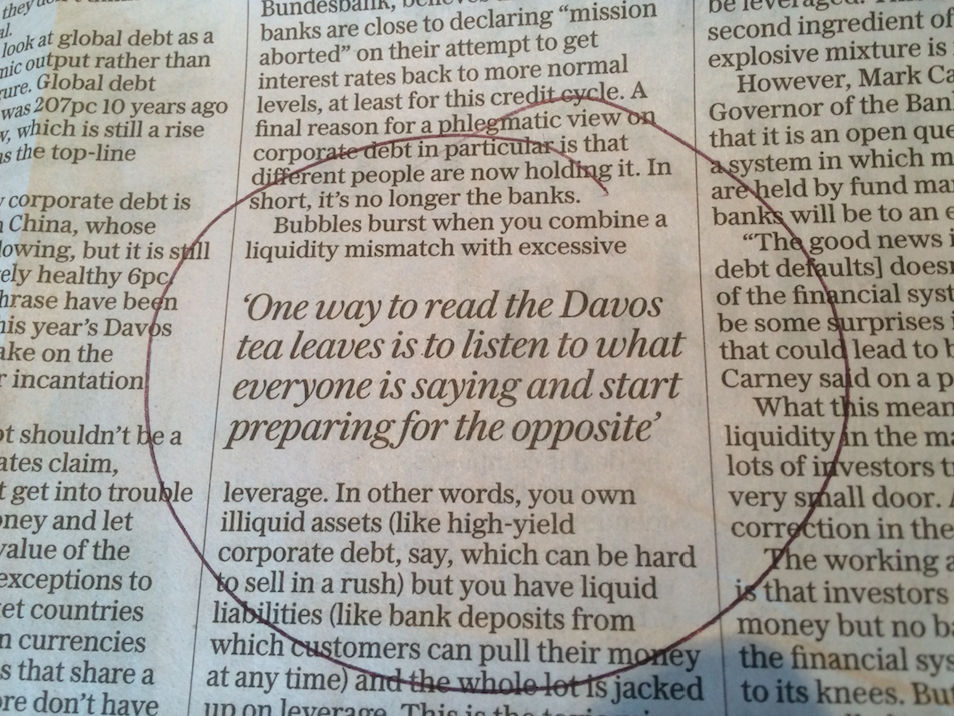

I just posted a link to this article in the comments on my previous post, but it’s worth repeating here as it’s so good. Thanks to Kevin for this btw.

One passage to kick off…

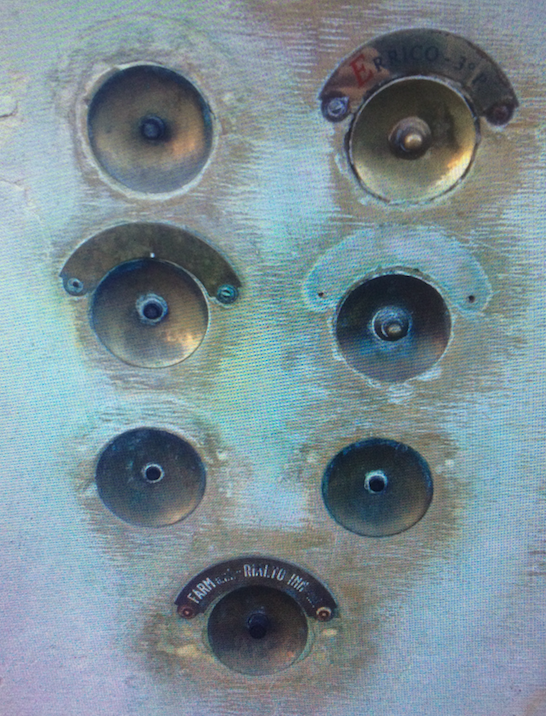

Pallasmaa, in his The Eyes of the Skin, noted that touch is a key part of remembering and understanding, that “tactile sense connects us with time and tradition: through impressions of touch we shake the hands of countless generations”. Is this reach for the switch merely functional, then? A light switch can stick around for decades, as with the doorhandle. When you touch the switch, you are subconsciously sensing the presence of others who have done so before you, and all those yet to do so. You are also directly touching infrastructure, the network of cables twisting out from our houses, from the writhing wires under our fingertips to the thicker fibres of cables, like limbs wrapped around each other, out into the countryside, into the National Grid.

Is it just me or are people getting a little fed up with constantly being asked to rate everything or press stupid smiley buttons? It’s one thing to occasionally be asked whether your food was cooked correctly, but do we really need to press a button to say how pleased we were with our airport security experience? It’s endless: Uber drivers (always 5 stars on the basis they’ll do the same and award us 5 stars), eBay, Amazon…

What’s next? A row of smiley buttons to say how, overall, you rated your life just before you take your last breath on a hospital bed?