There’s a huge amount of nonsense out there about AI. This is a great introduction to what’s going on and what isn’t. I’ll post more if I find anything else worth watching.

What can’t AI do?

Oh, my goodness. I’ve started a piece looking at what AI can’t do and it’s turning into a real can of worms. I thought it might be simple: AI is fairly useless at creativity and not great with empathy (AI isn’t a “people person”), and I might throw in common sense and perhaps leadership. But everything I dig into throws up more questions than answers, and everything is more or less contestable.

Monday statistic

Last year the number of students taking a creative arts exam in the UK fell by 51,000. Arts subjects, including design, drama and art, now account for only 1 in 12 GCSEs. Four years ago it was 1 in 8. A national scandal.

If we want our children – and our children’s children – to compete with machines that can think, I agree with Lucy Noble, Artistic & Commercial Director of the Royal Albert Hall, that an arts subject should be compulsory at GCSE, although I’d add philosophy to the list of compulsory subjects too.

Explainable AI

I sometimes get asked how I look at things, especially how I know what to notice and what to ignore. My glib answer is often the rule of 3: if 3 people mention the same thing, or I see 3 examples of something in different contexts, I tend to pay attention.

A good example is Explainable AI. Early this year a coder mentioned an idea for what he called ‘software that rusts’. For some unexplainable reason this instantly grabbed my attention. It was somewhat illogical and possibly contradictory, but there was something in the idea. Digital is pristine and identical. But humans like imperfection and uniqueness.

Last week I was talking with some students at the Dyson Lab at Imperial College. We got onto AI-to-AI interactions and I came up with the idea of Digital Provenance. This would be a bit like Blockchain, in the sense that you could see the history of something digital, but it would have a far richer and more human storyline. Digital products would be able to reveal not only where they were coded, but also when and by whom. In other words, the idea of provenance, or ‘farm to fork’ eating, transferred to software code or anything else digital.
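
To make the ‘a bit like Blockchain’ comparison concrete, here is a minimal sketch, assuming a simple hash chain written in Python. The class names, fields and sample entries are hypothetical and don’t come from any existing provenance system. Each entry records who, where and when, plus a short human note (the richer storyline), and stores the hash of the entry before it, so quietly rewriting history breaks the chain.

```python
import hashlib
import json
from dataclasses import dataclass, field


@dataclass
class ProvenanceEntry:
    """One step in a piece of code's history (hypothetical schema)."""
    author: str      # by whom
    location: str    # where it was written
    timestamp: str   # when, as an ISO 8601 string
    note: str        # the human storyline: why the change was made
    prev_hash: str   # hash of the previous entry, linking the chain

    def digest(self) -> str:
        # Hash a canonical JSON form so any edit to an entry changes its hash.
        payload = json.dumps(self.__dict__, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()


@dataclass
class ProvenanceLog:
    entries: list = field(default_factory=list)

    def record(self, author: str, location: str, timestamp: str, note: str) -> None:
        # The first entry has no predecessor, so it points at a fixed marker.
        prev = self.entries[-1].digest() if self.entries else "genesis"
        self.entries.append(ProvenanceEntry(author, location, timestamp, note, prev))

    def verify(self) -> bool:
        # Each entry must point at the current hash of the one before it.
        for earlier, later in zip(self.entries, self.entries[1:]):
            if later.prev_hash != earlier.digest():
                return False
        return True

    def story(self) -> list:
        # Render the history as readable lines: when, who, where, why.
        return [f"{e.timestamp}: {e.author} in {e.location}: {e.note}"
                for e in self.entries]
```

For example, `log = ProvenanceLog()`, then `log.record("A. Coder", "London", "2019-03-01T10:00:00Z", "First draft")` followed by a second `record(...)` call gives a chain where `log.verify()` is `True`; editing the first entry’s `note` afterwards makes `verify()` return `False`. Unlike a real blockchain there is no distributed consensus here, only tamper-evidence.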

Then the day before yesterday I was with some people and the concept of Explainable AI came up. The best way of thinking about this might be to imagine a black box that can be opened up. I think this will become increasingly important as and when accidents happen with AI and fully autonomous systems. These machines need to explain themselves to us. They need to be able to argue with us over what they did and why, and to reveal their biases if asked. At the moment most of these AI systems are secret, and neither users, regulators nor governments can look inside. But if we start trusting these systems with our lives, then this has to change.

BTW, since I’m getting into AI, I’d like to highlight a problem that’s been around for centuries – human stupidity. In a sense, the issue going forward isn’t artificial intelligence, it’s real human stupidity, in particular the stupidity caused by an overreliance on machines. As Sherry Turkle once said, “What if one of the consequences of machines that think is people that don’t?” There is a real danger of a culture of learned incompetence and human de-skilling arising from our use of smart machines.

Silly example: I was at London Bridge Station earlier in the week trying to get on the Jubilee Line. The escalators were broken. The queues were horrific. So, I asked why we couldn’t use the escalators. “Because they’re broken” was the response. “But they’re steps,” I replied. “They still work.” OMG.

Extractivism

Read this. It’s interesting.

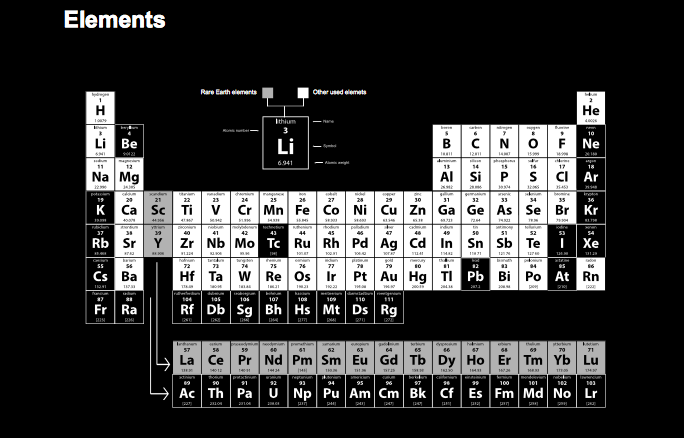

Visual of the Week

Thanks to Kevin for this! Click here for a closer look…

Human Made

I was having a coffee with the editor of Wired magazine a few days ago and we got onto the subject of ‘human’ being a trend in the future. He mentioned that the founder of Net-a-Porter had said that in the future we will buy clothes with labels saying “Human-Made”. I agree.

As AI, robotics and automation grow, the balancing trend (or countertrend, if you prefer) will be an emphasis on human hands, human production, human craft, human imperfection, human vs machine intelligence and so on. We might even get to a situation where people buy human-made vs AI-made in the same way we currently buy organic vs non-organic food.

So, for instance, we might see burger bars with signage saying ‘human-made food’, books saying ‘human-written’ or computers with labels saying ‘human-designed on the outside, AI on the inside’. At the very extreme we might eventually witness an artificial-authentic divide in the human population, with the original, organic human species on one side and a genetically augmented, machine-enhanced hybrid species on the other.

As an aside, I was talking with some students at the Dyson Lab today about AI-to-AI interactions, and one idea that came up was ‘digital provenance’. For example, we might be able to ask where the code for a product came from (when it was written, by whom and where), in much the same way that food products currently display information about who made them, and where and when. Maybe code will have a ‘best before’ date, and maybe we’ll ask algorithms to reveal their biases (a bit like Blockchain but with more narrative quality).

BTW, two spin-offs of possible importance here. First, the difference between artificial and synthetic. Second, how do you know when something is a synthetic construct that mimics human behaviour or human production? Which is to say, how do we really know something is real? (Thanks Nik).

Totally Random

AI ‘Watson’

I’ve been attending a conference called the Singularity Summit: Imagination, Memory, Consciousness, Agency and Values, part of the AI and Humanity series at Jesus College Cambridge. One of the great things about going to Jesus College is that you can stand in the taxi queue outside Cambridge station and shout the immortal line: “Is anyone else waiting for Jesus?” Anyway, a wonderful two days. A few standout quotes are below. BTW, one thing that really stood out for me, talking to people who work in serious AI research, is the degree to which they are almost anti-tech. They don’t use computers outside of work, they have dumb phones or none at all, they aren’t on social media, they avoid digital media, and they like to read and write using paper (“they (digital products) scatter your brain.”).

“Experts on X are rarely experts on the future of X.”

“There is no definition of intelligence that isn’t political.”

“The robots won’t take over because they couldn’t care less.”

“The fear of the arts and humanities people is that there isn’t anything unique about humans…the fear of the (AI) scientists and engineers is that there is.”

“Rapid change makes the future harder or even impossible to predict”

“The human mind cannot merely be a matter of matter”

“Experience drives perception.”

“Humans need comfort…they need to feel safe.”

“Machines that can substitute for intelligence.”

“Thoughts change with bio-physical change.”

“Humans develop over time. Machines don’t.” *

“The fantasy (fetish?) of the asocial person.”

“Is it wrong to steal from a robot?”

“Machines that are made to suffer”

“AI is a corporate idea” (i.e. born of the military-industrial complex)

(The world is being designed by) “WEIRD people (Western, Educated, Industrialised, Rich, Democratic)” (to which I might add people on the spectrum).

(Perhaps we develop) “a nurturing process for robots” **

* Links to a lovely idea – software that rusts.

** This would contradict the idea that machines don’t develop over time (i.e. we release robots – or embodied AIs – into the world to learn over an extended period in much the same way that humans do). It might take a (human) lifetime, but you might only have to do it once.

Can you teach a computer to be curious?

Good question, right? But be careful: to my mind, reinforcement learning isn’t necessarily the same thing as curiosity. Also, I think curiosity is on a spectrum. Sure, you can build carrot/stick and right/wrong motivations into a machine, but can you build in a real passion for serendipitous and perhaps illogical and inconvenient encounters? Is curiosity just an alertness to pattern breaking, or is it a fundamental desire to know that cannot be taught to people, let alone machines?

I guess we’ll find out sooner or later.

Three good articles on the subject.