A Cambridge (UK) scientist has developed a prototype computer that can ‘read’ users’ minds by capturing and then interpreting facial expressions that signal states such as concentration, anger or confusion. In experiments using actors, the computer was accurate 85% of the time, although this dropped to 65% with other people. The technology raises a number of privacy-related issues, not least the collection of highly sensitive personal data. Toyota is allegedly already working with Professor Peter Robinson, the inventor, to link the emotional state of drivers with various safety controls and mood-sensitive features in cars. Other customers might include insurance companies wanting to crack down on dishonest claims, banks targeting identity fraud, teachers trying to teach (does the student really understand?) or governments wanting to identify terrorists or social security cheats. In the future, car companies or local councils could even tailor road maps or directional signage to the level of aggression of individual drivers. What intrigues me most, however, is whether you could link mood sensitivity to products like radios and televisions so that they tune in to ‘happy’ music or programs. There is also the fascinating possibility of online retailers tailoring their home pages, product offerings and even product descriptions to the emotional state of their customers.

If Rupert Murdoch is predicting the end of newspapers as we know them, then we should probably listen. In 1960, 80% of Americans read a daily newspaper. Today the figure is closer to 50% – and falling. Globally, circulation is falling too. Between 1995 and 2003, global newspaper circulation fell by 5%. In Europe the fall was 3%, and in Japan, 2%. Many young people (‘digital natives’ as Mr Murdoch calls them) don’t read a newspaper at all and, if the current trend continues, the last newspaper (probably read by a ‘digital immigrant’) will be thrown into a bin sometime in the year 2040. So what is going on? The explanations are many and varied. More people are reading news on the Internet, fewer retailers deliver newspapers door-to-door (fewer children doing paper rounds), fewer people are using public transport (they drive to work listening to the radio instead) and fewer people are sitting down to breakfast at home (less opportunity to read newspapers). You can’t just blame the Internet either, because the decline in newspaper circulation predates the web. It’s not all bad news though. Some local papers are thriving, and in the UK sales of ‘quality’ papers are actually increasing thanks to innovations like compact editions. However, Internet-based news and opinion do have a significant advantage over paper-based products because of their functionality and interactivity. Words like ‘conversation’ and ‘discussion’ really mean something on blogs because readers can actually contribute. OhmyNews in South Korea, for example, is produced by 33,000 ‘citizen reporters’ and read by 2 million Koreans. Add to this developments like photoblogs, video blogs and podcasting (blogging with sound) and newspapers are looking like yesterday’s news. Incidentally, to put this piece into perspective, it’s interesting to read in Prospect magazine that in 1892 London had 14 evening papers. Now it has just one.

Historically, politics has been dominated by big ideas. However, that was then and this is now. The last big idea in politics was probably free market economics (Thatcherism and Reaganism in the 1980s) but it’s been pretty barren ever since. So it’s nice to hear about an idea from a group of British politicians who think that what’s needed is a plethora of small ideas. The manifesto of small ideas includes:

- Compulsory training for teenage fathers
- Tax credits for successful marriages
- 30-minute traffic-free periods in cities
- Any building over 10,000 square meters must include a library
- Chief Constables must walk the beat for 4 hours a month

Not quite what some people were thinking of, but at least it’s a start.

Are we all moving rightwards? A Harvard University poll found that 75% of students supported the armed forces, compared with just 20% in 1975. Why the change of heart? First, September 11. Second, the Republican Party has spent a lot of time and money recruiting the young. Third, young people just love to do the opposite of what their (liberal) parents did. The result is that all sorts of things that would have been considered unthinkable twenty years ago are now perfectly acceptable. For example, 36% of US high-school students now believe that the US government should approve news stories prior to publication or broadcast.

Will national governments survive the current century? Evidence is already emerging that power is shifting towards the local at one end and the global at the other. We are also witnessing the decline of law and even security at the national level.

Do you really care who wins the next election? Aren’t they all the same anyway? Perhaps this is why, in the UK, more people belong to the Royal Society for the Protection of Birds (RSPB) than to all three major political parties combined. Take another example. More young people voted on American Idol than in the last US federal election. But politicians in Lithuania have developed a novel solution: give away drinks at polling stations. At a recent election, turnout rose from 23% to 65% as a result.

Historically, international relations have been based on relationships between nation states, but this is changing. Many of the current conflicts are between groups inside states. Moreover, the very idea of the nation state is itself under threat from both above and below. Many people see local issues as more important than national politics because there, at least, they have a chance of influencing outcomes. This may lead to the rebirth of city states as national politics is squeezed between powerful multinational corporate and NGO interests on the one hand and locally politicised individuals on the other.

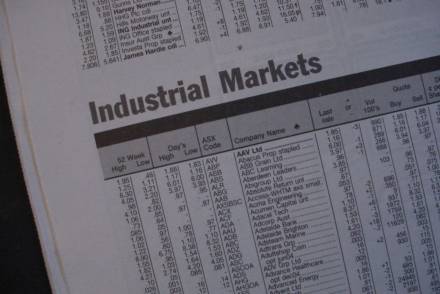

Thirty years ago, most Western countries had between 20% and 40% of their workforces in manufacturing. Now the figure is closer to 2-4%. Have we seen a shift like this before? Yes, during the Industrial Revolution. More recently, at the end of the 1800s, most people in the US were employed in just three areas: agriculture, domestic service and horse transport. So what are the future implications of a shift from resource-based manufacturing to service-based and then idea-based economies?

There is a lot of talk about the growing power and influence of Brazil, Russia, India and China (the so-called BRIC countries), and companies are falling over themselves to invest in China in particular. For example, according to McKinsey, Asia (excluding Japan) will represent 30% of global GDP by the year 2025 (Asia currently represents 13% and Western Europe 30%). However, this all has a faint smell of history about it, since other countries have been hot before (remember Japan, Germany and the so-called Tiger Economies?). To some observers, China and Russia are also built on foundations of sand. Ethnic violence, widespread corruption and speculative building all have the potential to upset this growth trend. Nevertheless, expect a major shift of economic power to the East.
