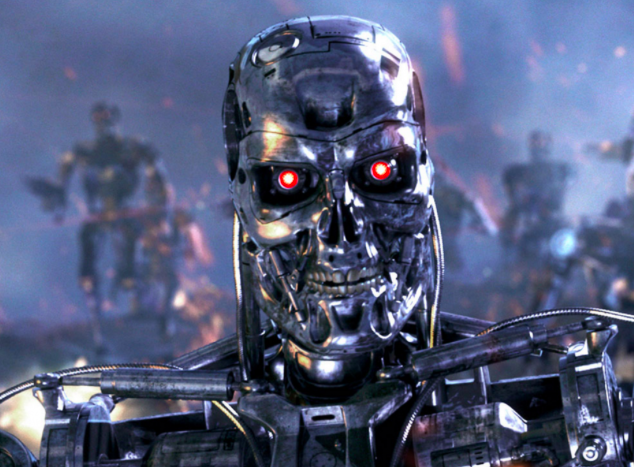

The key argument in favour of autonomous vehicles, such as driverless cars, is generally that they will be safer. In short, computers make better decisions than people, and fewer people will be killed on the world’s roads if we remove human control. Meanwhile, armed robots on the battlefield are widely seen as a very bad idea, especially if these robots are autonomous and make life or death decisions unaided by human intervention. Isn’t this a double standard? Why can we delegate life or death decisions to a car, but not to a robot in a conflict zone?

You might argue that killer robots are designed to kill people, whereas driverless cars are not, but should such a distinction matter? In reality, driverless cars might kill far more people by accident than killer robots do, simply because there will be so many more of them. If we allow driverless vehicles to make instant life or death decisions, surely we must allow the same for military robots? And why not extend the idea to armed police robots too? Same logic.

My own view is that no machine should be given the capacity to make life or death decisions involving humans. AI is smart, and getting smarter, but no AI is even close to being able to understand the complexities, nuances or contradictions that can arise in any given situation.